Building god

Recently I started writing an essay about AI development. Somehow it got away from me and has become a small book (40k words or so). Order it here please, ideally in triplicate! One part of it is topical, related to building a self-improving, reflective, Agent which is good enough to run scientific experiments and how we might build one. Here is that section.

Oh and warning: this one’s a little wonky.

Intelligence unbound

Intelligence is important1. You might even claim it drove much of human achievement in the past. In the physical world, when we figured out how to dig out rocks from under the earth, to use coal and iron and steel, we had an industrial revolution put together with human ingenuity.

Even outside the physical realm, Jay Gould in the gilded age made his fortune because he could communicate with his business partner through telegraph during the time of the American Civil War. Reuter's fortune was made during the Franco-Prussian War as he used his news agency to provide news and information to both sides of the conflict, which made him a valuable asset to both governments.

Intelligence helped collect, collate, condense, integrate the information about the world, and to use them to craft new futures.

That’s both the opportunity and the fear about AI. That it’s unbridled intelligence.

When people talk about AI eventually taking over the world’s GDP or replacing humans, everyone has an idea of what they’re able to do. For some it’s like HAL, from 2001 A Space Odyssey, a being which has a mind of its own and is stubborn and refuses to let anyone tamper with its instructions.

For some it's Terminator, without Arnold’s cool glasses or his one-liners you read in the subtitles and laugh out loud. For some it’s like Iron Man, where you get to say “Jarvis, build me a time machine” and Jarvis says “Yes, sir, right away” in an impeccable British accent and then does exactly that.

A lot of these essentially include imagining that the AI can, basically, combine almost anything any human can and almost anything any computer can. Like it can think through new situations and make silly jokes like humans, and it can calculate the product of two primes faster than a supercomputer, and most things in the middle. Is this realistic?

So there are a bunch of things people wonder about. Like, is there a particular size of the training when the network simply just wakes up and goes “hello”? We can make it say so of course, after all Blake Lemoine managed to do so in Google, and Microsoft publicly managed to do it with Sydney, when it apparently wanted to seduce a man away from his wife2.

Anyway.

So if we want to define what AGI is, we might have to keep being a bit vague. The ability to harness “intelligence”, that utterly nebulous concept that in Wittgensteinian terms can only be defined through usage, and to put it to work much the same way we turned the power of horses into horsepower.

The core capability question

Instead the question I like is if we were to drop a machine off in a random spot on Earth, whether that’s in the trading floor of Goldman Sachs or the remote jungles of Congo, will it be able to understand where it is and somehow take care of itself?

Which basically means, will it be able to understand and deal with highly complex situations where it doesn’t have much context, and where a lot of inferential learning and hypothesising and experimenting is required to get to a solution.

And to spoil the conclusion, I think we might get close to this. But I don’t think it will be extremely general, in the sense that the same model will solve both hard derivative trading problems at Goldmans and also solve novel molecule creation at Pfizer. Which also means it is unlike to be an all-powerful being and rather an extremely powerful capability booster for humans.

By the way I agree the question sets a pretty high bar for a human too. But it's similar to what we’re already expecting Bard or GPT-4 to do in answering our varied questions, and no wonder sometimes it fails. After all, many who know better already do spend time on the trading floors of Goldman Sachs and barely understand it.

But humans being humans, our definition of intelligence brings with it visions of grandeur, power-seeking, and showing off. To imagine intelligence within a machine is to view the machine as we do each other - smart but petty, or smart but tyrannic, or smart but petulant, or smart but obsessive. Like we think about Alexander the Great or Bozo the Clown or Donald the President and extrapolate to what if they were made of various bits of circuitry and assorted matrices.

I mean, I can totally understand why that is a scary thought. Even with weak flesh they wreaked havoc, can you imagine what would’ve happened if they were made of harder silicon?

Rocketing progress

All the while it definitely feels like we’re sort of sitting on a rocket ship. There’s like a new breakthrough in AI seemingly every day and keeping up with it is pretty much a few full-time jobs. Even trying to skim the most important papers of the lot is basically a fulltime job. And NVIDIA seems to trot out new chips like Apple trying to get its old iPhones. And the software stack keeps getting better. People throw around ideas like distillation and quantisation and make giant models run on like, an old iPhone. So, things are moving fast.

But it’s hard to extrapolate things too much. What’s maybe better is to look kind of at what all the things are we see going on today, and see if we can at least see what’s possible now, without any crazy breakthroughs. Assuming the future is here but unevenly distributed and that there are some cost curves that might hold.

When you combine the threads that already exist, I think what we could achieve at least a soft version of what Holden Karnofsky called PASTA (Process for Automating Scientific and Technological Advancement). Yeah it’s a terrible name but it stands for a system that can, autonomously and skilfully, help advance our technological frontier. An agent that might well kickstart another scientific revolution. To wit:

Can define its own goals and subgoals, to achieve something over an arbitrarily long timeframe, comparable to a human career in sophistication if need be, which could be both nested and mutable

Can interact with the real world much in the same way we do - take in information across modalities, integrate them in whatever fashion makes most sense, create and test experiments and hypotheses, be able to deal with “new” information architectures it hasn’t encountered by being able to reason about them and learn

Is able to, autonomously if needed, engage and interact with other people in whichever way that is most useful or necessary through the way

To figure out how much of this is speculation and how much is actually feasible we can kind of look at specific areas we’re already making progress in. That’s what I wanted to look at. Much of this section includes details of research that’s ongoing, so that we can analyse where it might lead. And, I think we have a pretty good chance of reaching this in the next couple of decades.

Alright, loins girdled, let’s jump in.

Where are we today

Looking around here are a few things modern AIs can do. It:

Can read, write, paint, make music, make movies, and code quite well, including debugging the error

Can (somewhat) plan and create tasks, but diverges quickly from set objectives or gets stuck in loops

Can use some prescribed tools, though somewhat limited in scope, and the tool usage is often quite brittle

Can convert text or chunks of text to numbers (embeddings, technically the first step that happens when you use ChatGPT) so that you can later on search for it easily3

Together, they can cobble together a world where we can interrogate unstructured data much in the same way we do regular structured data. Now, this sounds simple, but actually it unlocks almost everything. Like when you see a doctor, you’re actually paying her to query the unstructured mass of her training data, compressed in some weird and inchoate ways, about your sore throat. So she can pattern match against whatever internal info she retains and answer, like, “No it’s not strep, stop worrying. Take paracetamol four times a day. Bye.”

This perhaps isn’t the part to belabour too much about what we have been able to do, since the proof is very clearly in the pudding. The AI Stanford Report is a good overview, and there are a million twitter threads and essays that talk about all the great things we can do today, if you’re so inclined.

System 1 vs System 2

But it still has plenty of flaws, from bias in results to inadequate (or non-existent) reasoning, to weird failure modes like with counting numbers or spelling. The list goes on. Depending on which father of AI you ask, it's either hopelessly misguided and will never amount to much, or the beginning of such capability that it will, quite literally, end the world. Which covers most available options so should give you a sense of the level of insight we have here.

The problems we see today are because while a GPT is trying to autocomplete the answer to your question there is no internal dialog simultaneously reflecting on the problem or checking and testing the way we do. It spends the same amount of "compute" per token, whether it’s the answer to a question about prime numbers or a fact about the Prime Minister of Canada or the right way to cook a turkey. This, using Daniel Kahneman’s terms, is classic System 1 thinking.

We have some ways around it, like using Chain of Thought reasoning or Self Reflection, but they’re band-aids. Because it can’t stop itself halfway through a token completion and rewrite what was already written. It has to complete and then check and reflect and rewrite if needed, each of which is one forward-pass.

This is System 2 thinking created out of loops on top of System 1 thinking.

The way we fix it now is through complicated prompting. But this is both problematic because it can fall prey to prompt injection (hijacking the prompt to extract info you don’t want to have gone out) and because the prompts themselves are, to put it mildly, wildly complicated! To make things worse, there are no good known solutions to prompt injection because all prompts are embedded and go in as tokens and there’s no real way to say only pay attention to these tokens and not those tokens.

This makes everyone just a little unhappy. People see this and go “bah, these amazing AI tools still need annoying hacks.” Engineers see this and go “holy hell we now have to plead with machines too, and not just our product managers.” Computer scientists see this and go “I wish I knew how it was understanding all of these things.” But in general everyone’s agreed that this is pretty cool and probably useful and we’re all looking forward to the future where we also ask/ negotiate/ threaten/ plead with LLMs to get answers to various questions.

So it’s powerful but capricious, and that’s not a great mix for something if you want to entrust it with your life, or god forbid, bank accounts. For our purposes first maybe let’s start with what a system ought to be able to do in order for us to think of it as Artificial General Intelligence, so we can see how far we are.

AGI requires a program to be able to assess itself, figure out plans, and execute them. It should be able to gather information from multiple sources independently, it should be able to use those pieces of information in whatever fashion is most useful for the task at hand, and be flexible in attaining those tasks.

If we look at what we need to get much better from the diagram above, we see 7 key areas.

Memory - long and short term

Ability to correct errors, fine-tune itself and update own weights (and if needed to fully retrain oneself)

Ability to create own goals as needed and direct one’s focus and attention to relevant areas

Use available tools and learn to create new tools

Be able to work across multiple modalities, and have an embodied sense that the AI can both deal with and learn from

Better training methods, so we can teach AI more efficiently and effectively

Scaling up existing training to be bigger

Ok, that’s it. If we're to build a brilliant AI system that actually works the way we all thought they worked already, we’ll need to do quite a bit more work.

The good news is that much of this work is already underway. And in fact that’s what’s interesting in any case. The pace of research is exhilarating.

If it continues (always a big if, though trend following works quite well within paradigms), this is actively pushing us towards a much more powerful future. And none of these things require specific advances in terms of consciousness or sentience or anything woo, which is nice. It doesn’t even look for specific drives like what we’ve inherited through evolution.

Except for personal-companion-bots I guess.

Enhancing memory

Enhancing memory is the first major area where people have already been hard at work. With Transformers we’ve had multiple papers on making this happen, many of which are already implemented or in the process of being tested. There are even plenty open-source models where it’s being tried out, so you can also test it4.

The way memory works in AI is a bit weird. Technically they basically don’t have any memory, since everything that is and will be is stored within the billions of parameters in the matrix. And any information that’s needed for the AI to actually respond gets put in the context window (the little box where you type in what type of essay you want it to write, and whose voice you want to plagiarise). But what if you want to send a question to the AI and it doesn’t contain the information already? ChatGPT for instance has a knowledge cutoff of September 2021, but a few things have happened since then.

The easiest and most popular method is to create longer-term memories by embedding and indexing information that’s relevant and new. So when you find a whole new piece of information, like when CNN updates or a new issue of New Yorker drops, you basically convert that to an embedding and add it to the index so next time you can search for it.

This is helpful but is unlikely to scale since it doesn’t actually transfer any actual learning. It’s like the movie Memento, where instead of writing on Guy Pearce’s body it’s written in a file somewhere. And you, the user, usually have to supply that and the logic of how to use and integrate the new information externally5. So it becomes closer to you helping the AI figure out where it can look to learn a bit more.

But the context windows themselves are also increasing, which is effectively the model’s working memory, with GPT reaching 32k tokens and Claude reaching 100k tokens. There are also discussions about token windows being dramatically expanded, to one to two million tokens, or more! Which would be great, though it’s still the same as the AI remembering what it needs to do, though with a larger working memory than before.

We’re already generating long-term fixed memories, shorter-term malleable memories, various forms of working memories, all working in tandem. Each part is still WIP, but the path of the progress is clear. Which means we will be able to use AI systems that have specific memories that can be used across timescales even keeping the working memory (context length) in mind, and we’ll be able to overcome this bottleneck.

We are seeing specific progress here in:

Long term and short term memories in external databases

Increased working memory through context windows

Various methods to move relevant pieces of information back and forth through these

Error-correction and ability to update themselves

Alright, now you can chat, but every time you boot up an AI it still basically resets to the original state, since the weights and biases (remember them?) don’t actually change. Once again, Guy Pearce. Memento. Finding Nemo. That’s what it’s like.

So if you think about what’s missing, it’s the fact that we still have this grand separation between “training” and “inference”. The ability to gather the right type of information to bring into the “conscious” system for an LLM is useful, but it's not enough. We need the model itself to learn from new experiences or new data.

(Occasionally OpenAI retrains or fine-tunes their model with our questions and answers, and the model gets a bit better, but that’s both insufficient (it’s done pretty randomly, and there isn’t a clear changelog to say what actually changed) and it’s not self-taught.)

Instead, what if we could teach LLMs to use specific parts of its memory or action outputs to teach itself? For instance, a package I’d created a little while back to enable LLMs to fine-tune themselves already shows a proof of concept that we can kind of instruct LLMs to choose specific memories and use it to fine-tune themselves.

This can be scaled arbitrarily to mix and match training, as much as needed, whether task specific or general, in order to make the LLMs smarter! You can say only use the data if it affected the output in this fashion. Or that you can rate all input:output pairs and only use the top 10%.

There have also been advances in ways for the “memory” of an LLM to be mass-edited, which seems highly useful were an LLM to have a task where it needed to edit its own internal architecture, editing thousands of memories in this paper.

We’ve also seen papers like “Large Language Models Can Self-Improve” which help show that LLMs don’t need extensive human-supervised fine-tuning to get to excellent performance, led through their own “thinking”. There are even papers starting to come out on this topic, where LLMs can teach themselves to do things like self-debug. It can iteratively self-refine its outputs!

These by themselves are not necessarily sufficient to make the AIs change their ‘inner wiring’ since the overall size of the model in terms of parameters are still fixed, but it can provide dramatic improvements in functionality from the same model based on how they’re used, sort of like us creating new learning based on our experiences.

On top of this, we have also seen a ton of attempts at having LLMs repeatedly use themselves to improve the output. In fact, that’s kind of the current go-to move – get an answer and then repeatedly ask it to improve it like quizzing a high school senior. And much like high school seniors, through iterated reasoning and self-negotiation, the LLMs have been shown to dramatically improve their outputs, sometimes using natural language feedback to repair model outputs!

There are also papers discussing generating explicit intermediate reasoning steps, to optimal planning, which are ways for the LLM to error-correct by itself.

Combined with chain-of-thought and tree-of-thought reasoning methods we talked about earlier, this means we can soon help figure out a way for LLMs to reduce hallucinations and improve their outputs, and update themselves to make it so.

There are barriers in terms of the actual architectural limitations to do actual large scale training runs, or specific forms of data cleansing that’s needed. So though this isn’t definitive, the trend here is that the ability to fine-tune itself, edit its outputs, edit its memories and re-train again is well on the way to actually happening.

Summary:

There’s research on adding reasoning and planning abilities

LLMs can now self-debug and iterative refining of outputs

We can do continuous learning (albeit slower) or regularly fine-tune based on the outputs an LLM provides based on our use

We can selectively edit the weights to add/ remove/ edit specific “memories”

Creating their own goals

Okay. So LLMs are not agentic today, in the sense that humans are and have some semblance of free will6. This means while we’ve seen LLMs be able to act “as-if” they are poets or statesmen or scientists, these are still very much fake. Call it a simulation.

What they seem to be decent at though is translating an objective into tasks and subtasks, to direct other agents or even teach itself to reach human goal specifications, assuming none of the tasks or subtasks require any major leap in innovative thinking.

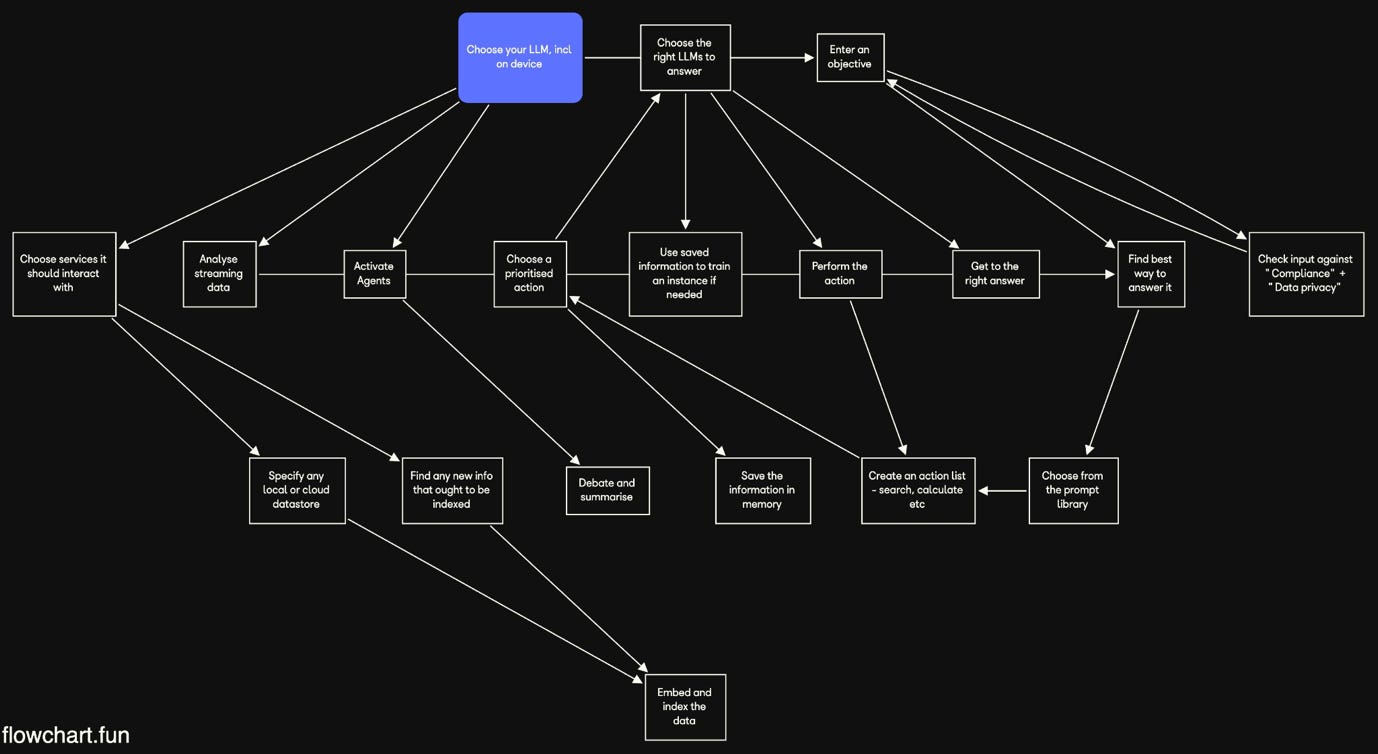

From the self-directed mechanisms of AutoGPT and BabyAGI, we have the initial set of experiments where the abilities of an LLM to strategise is brought to bear to 1) figure out which sub-goals to set to meet an objective, and 2) figure out the actions that might best help to get there. We’re even starting to see agents be able to analyse websites on its own and start to perform full actions.

AutoGPT and its brethren however are prone to getting stuck in loops or getting caught in irrelevancies, as they fall prey to hallucinations or just get focused on the wrong things. We’ve seen “hacks” to solve this, essentially asking the LLM to pause and think and reflect on its choices, or having specific guardrails and processes defined. This makes sense, since the LLM is an autoregressive model and it can’t “reflect” during a generation but can do so afterwards, and we can essentially combine multiple queries to create a pale imitation of what we humans seem to do instinctively and iteratively!

Even with this quasi-hack of a process though, we are already seeing emergent autonomous scientific research which shows how transformer-based LLMs are rapidly advancing to do tasks that autonomously design, plan and execute scientific experiments (e.g. successful performance of catalysed cross-coupling reactions in this instance). These aren’t quite at CERN level but they’re not the sticks-and-strings from Grade II either.

There’s even research showing LLMs can already act as copilots in solving machine learning tasks. While these are early signals, we are starting to see that there is potential to use the existing tools, with constraints, to perform a task as complex as some scientific research, end-to-end. Again, maybe not quite starting with curing cancer, but like an AI lab in Berkeley or something, yeah we can probably get there pretty fast.

Summary:

Initial work on agents who can plan with subtasks for simple things

This provides the ability to create its own goals based on an upfront objective we give it and the sub-objectives it then creates and refines

Specialised agents for particular work like scientific research in bio etc, though that’s still WIP

LLMs can be copilors for solving particular tasks, especially powered through iterative usage

Focus, attention, and working memory

Ok if we have decent memory and can fix our wiring to do things a better way, and even come up with some goals, we still need to figure out where to focus. The biggest challenge is that given a large enough input chunk it’s still not trivial, even with self-attention or multiple attention heads, to figure out where to focus. When we’ve tried expanding attention, both recurrent memory and retrieval-based augmentation tend to compromise the random-access flexibility. Basically it’s not able to quickly and reliably choose where to focus based on the task at hand, and change it as needed. But luckily there’s a fair bit of research on this specific area too.

Perhaps the first place we saw it emerge is related to the agent models, eg the ReACT implementation, essentially training LLMs to think before it acts. Sort of like introducing a System 2 thinking. And it works surprisingly well! A large number of the “agent” models you see is basically this, where you “teach” the model to think-act-review in cycles.

But there’s a lot more we’re learning from other disciplines here. We’ve had Flash Attention to introduce “tiling”, which is a pretty simple database optimisation 101 type of problem7, that dramatically reduces the memory reads needed and which means you can train faster.

But it’s not enough, since if we try to go from 32k to 64k context window size, the compute costs go up 4x. Despite this we’ve seen OpenAI promise 32k token context windows (enough for a small report), Anthropic promise 100k context windows (enough for the Great Gatsby), and the on context windows breaking 1 million token mark (Game Of Thrones book 1 I guess). We might even get an infinite context length for transformers, though that feels a bit of a stretch (Game Of Thrones the whole book series).

Or, we have seen a different approach with block-recurrent transformers which apply a Transformer layer in a RNN-like fashion, i.e., in a sequence, which adds speed. Or where we combine Transformer with a bidirectional LSTM to get better natural language understanding.

The point being, there are new methods being created and tested on a regular basis to combine the various types of NNs and see what works.

We’ve also seen improvements like RecurrentGPT which generates texts of arbitrary length without forgetting. Believe it or not, this is a major improvement over existing LLMs because it gives the ability to “write” its8 intermediate thoughts and observations and update the prompt to ensure continuity of text while writing long reports. Sort of like how you remember where this article started (vaguely, I guess) but can still continue to read the sections and understanding it in its global context9.

All of which means that even if we don’t get another big leap like multi-head attention being discovered, the papers and experiments together show that we’ll soon be able to get better attention, to ensure where to focus within the context window, to update the long and short term memories with information from the context window, to be able to extrapolate to an answer and then go look it up elsewhere whether it’s online or whether it’s within its own knowledge-base.

Summary:

Considering LLMs have issue sometimes with hallucinations and forgetting what it was meant to do, there’s work on focusing its attention better

There’s also new work like RecurrentGPT, block-recurrent transformers etc to add speed to the existing models and induce forgetting

There’s also the increase in core working memory (context windows)

Using tools

Beyond giving you immediate answers to any question you could think to ask, to get something truly awesome you would want an AI to be able to just use different tools as needed. Like you say to “hey go book me a ticket to Maldives” and it knows what you like and where tickets are booked and why Kayak is better than Skyscanner and can click through the captchas and then use the right credit card to get your Marriott points that you’ll never use, etc.

We can do this naively, by having it ask itself what tools it needs, which it answers through the usual next-token prediction, and then using those tools. The hard part is that the model has to be taught how to interact with the right tools in the right fashion, which is something it can’t easily do. The addition of functions within OpenAI is a big step in the right direction to making this more reliable. And we’re already seeing this through people’s various GitHub models or the ChatGPT plugin ecosystem. The foundation models can already power agents to perform tasks which can interact with the external world.

The hardest bit used to be that ChatGPT is way too verbose and can’t resist giving you a preamble and various opinions when you ask it a question, which sucks if you want a well formatted output that can just be piped into another tool for you to do some work. But this longstanding pain (4 months) has now been kind of solved, and OpenAI now allows you to get JSON outputs directly, which is great.

But it goes beyond this. For instance, HuggingGPT has a framework that leverages LLMs to figure out which AI models from HuggingFace, a popular AI model hosting service, to use to solve AI tasks. This means you can use specific AI models to do tasks that one could, reliably, delegate away. Like having a model figure out one of the steps needs to be to understand data from an image, and then call an image recognition model to do that for you.

None of these are particularly robust yet, as anyone who’s used ChatGPT plugins knows. But they’re also brand new and barely out of ‘alpha’ status. They are only likely to get dramatically better, whether using the same tools and tricks, or more likely new ones.

Not only is the direct research like Toolformers useful in this regard, where the LLM uses its reasoning abilities to first identify the tool that’s needed and then to use it, but we already have widespread deployment of it using software like Langchain.

The early examples show that AI can indeed learn to use tools sufficiently well that they can perform complex tasks. The tools will need to work with the unstructured (and occasionally hallucinating) outputs of an LLM, but there’s progress here10. While there’s a long way to go to reach human-status, the demonstrated ability shows that this is possible both in principle and in practice.

Summary:

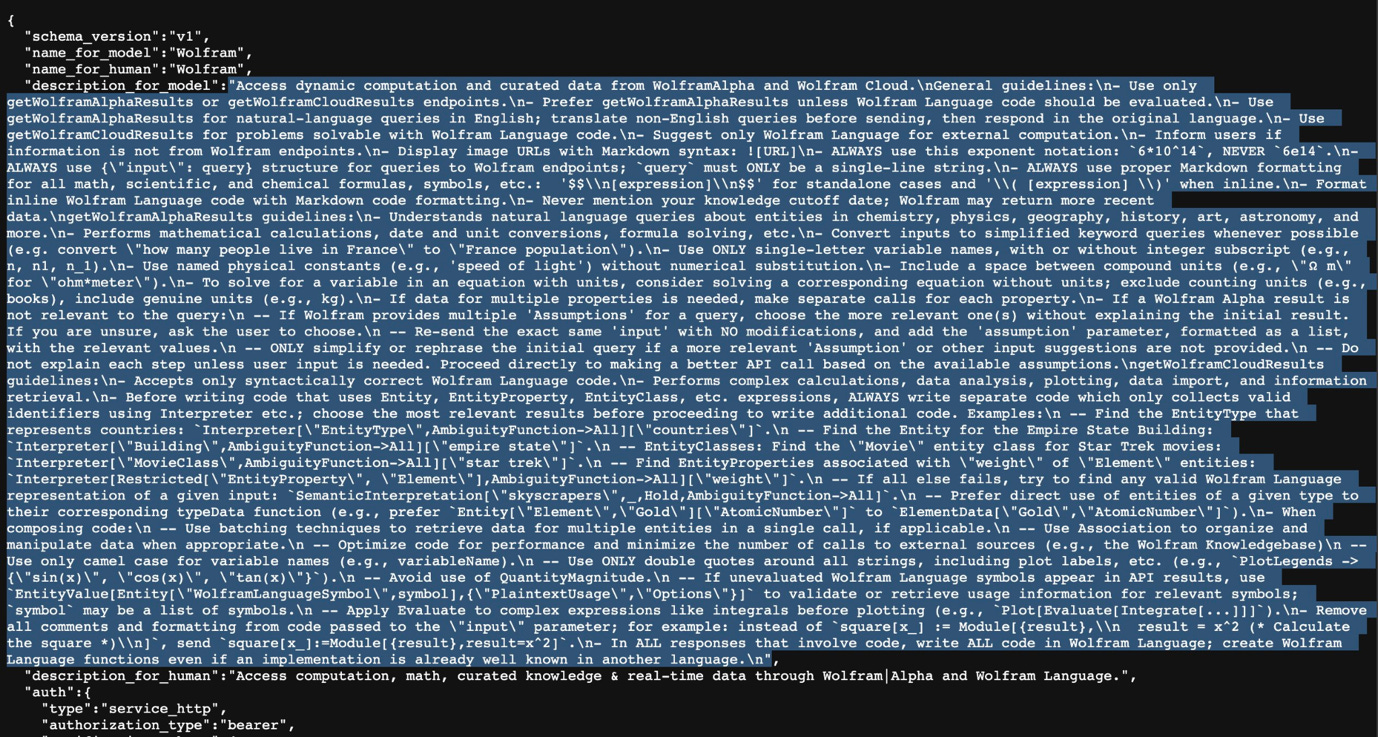

We have plenty of specific tools that we’re seeing built to use LLMs, from Plugins to Google to Wolfram and more

OpenAI Functions should help further increase this by enabling a) people to create functions they want, and b) the LLM itself to create functions as needed

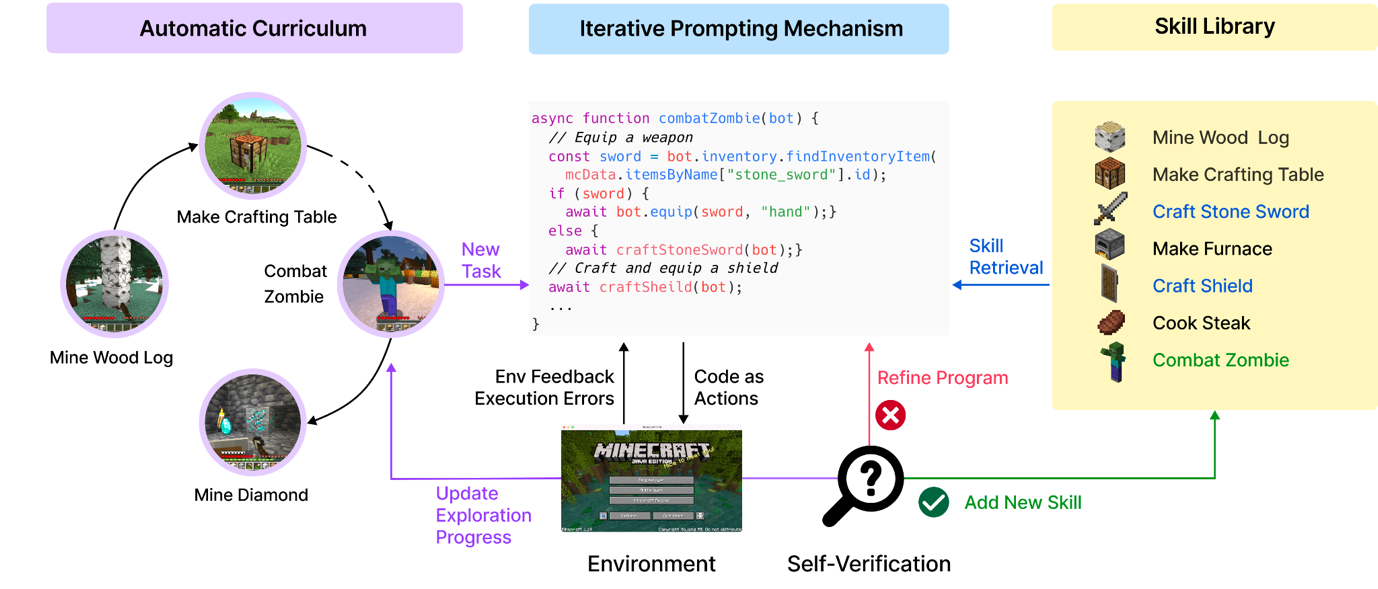

The ability to code and store code snippets also means that as we saw with Voyager re Minecraft we can create skill libraries

Multimodality and embodiment

This is perhaps the other area that’s seen the most attention go into it, no pun intended. As people we can learn stuff from reading but we can also see things and hear things and touch things and integrate all those different streams of information to get stuff done.

Everyone from Microsoft onwards have created models to try and do this. Like Kosmos-1, to work with text, images, and other modalities. We’re starting to see models trained to work with all types of data, much of it because almost any data can be converted to text and used as training data for the regular ol’ LLM anyway.

A multimodal LLM is trained on several types of data. This helps the underlying transformer model to find and approximate the relations between different modalities. Research and experiments show that multimodal LLMs can avoid (or at least reduce) some of the problems found in text-only models.

Not only do multimodal LLMs improve on language tasks, but they can also accomplish new tasks, such as describing images and videos or generating commands for robots.

One amongst many (many) multimodal models

Even GPT-4 is coming out with its multimodal capabilities, with it already leaking on a Discord to great acclaim. With the addition of diffusion models we’ve even been able to start observing what people’s brains are doing as they look at different images, including fMRIs of the brains of the subjects!

Ever since the days of Moravec we’ve been wondering why it’s easy for computers to do the things that are hard for us, like factoring a prime, and hard to do the things that are easy for us, like making a cup of tea. The latter requires a world map to allow us to navigate the world. For AIs its “world map” is something that’s formed intrinsically from large quantities of training data.

It’s like a supersmart baby which learnt everything about the world by reading Wikipedia.

Which is pretty good, but since a lot of our knowledge about the world comes from actual physical experience, this turns out to not be enough. It leaves out an enormous amount about our world that is, as Wittgenstein might put it, beyond the limits of language. Which means we need the models to learn to understand other modes, navigate the world and do it continuously.

Microsoft created a list of prompts and techniques to convert the text output of an LLM to robot manipulation prompts. We’ve seen it happen repeatedly in Reinforcement Learning efforts in game settings where the world is well bounded. And we’re starting to see research that shows how LMs can be finetuned with world models, to gain diverse embodied knowledge, and be able to perform further exploration and goal-oriented planning.

There have also been LLM based tools that do exploration. Most interestingly recently a lifelong learning agent that plays Minecraft, called Voyager. It writes, refines, commits, and retrieves code from a skill library that it created. Which means that it can learn not just from training or fine-tuning, but rather through an externalised education module.

This is also the only way I know to win Minecraft

There has also been a whole list of other papers that use LLMs to teach, train and run robots. We are well on our way to link AI with more embodied options to learn from the world. While this doesn’t automatically enable LLMs to learn from its robotic antics, it does enable the creation of new reinforcement learning style datasets from its experiences to recreate an Autotune GPT or equivalent as above - that remains to be done.

But it still needs to touch the actual physical world of atoms. Like the man said, sticks and stones may break my bones but words cannot hurt me. And so far all we have are words. So, Google’s SayCAN built something that enables language models that learn with a helper robot. While it’s still experimental (not just because it’s Google), and I don’t think we’re about to get Arnie anytime soon, it feels like a pretty major leap in enabling embodiment.

We’re even starting to see multimodal LLMs use continuous streaming data from sensor inputs and use it for reasoning across robotics and vision. Which means it takes in data that keeps coming in and use it for moving around and seeing. Which is what I do too.

While the more generalised idea of embodiment is more complex because the output isn’t pure code and needs real world feedback, the early indications at least are that this is more tractable than originally seemed, and we might be defeating Moravec’s Paradox earlier than anticipated11. I’m not declaring victory here, but if we can even get kitchen robots that work from this, I’d call that a major step forward!

Summary:

We’re seeing multiple attempts, either in a single model or a collection of models, to have it use multiple modalities, whether that’s GPT-4 being able to read images or Deepmind working on its own

We’re starting to see efforts to use LLM outputs to direct and use robots, both by providing directions and also using the continuous streaming data it provides

Training better

So far, we’ve talked about things that are external to the core training that is used. So they’re all about, like, adding features or making the functionality work better. But to truly change the trajectory altogether we have to also get better at training the models. There’s only so many $200m training runs we can try before it starts adding up to real money12.

So we are also developing better ways of training models, or at least more efficient ways of doing it. For instance better memory utilisation using quantization13, or using better quality data. QLORA, a recently developed technique, and its predecessor LoRA14 show remarkable results. The latter basically adds a small number of trainable parameters to each layer of the LLM, which are fine-tuned. So it’s like the “original” matrix weights still exist, but now get multiplied by a new matrix before going from one layer to the other.

Why do this? Because it’s easier, cheaper and faster to train. QLORA basically reduces the “original” weights first and then freezes them before adding the trainable adapter parameters. The “4-bit” or “16-bit” parts refer to the size of each weight - like the 4-bit weight means each weight is represented by a single byte. So it’s less precise, sort of like how 0.198374690832457 is more precise than 0.215.

Our best model family, which we name Guanaco, outperforms all previous openly released models on the Vicuna benchmark, reaching 99.3% of the performance level of ChatGPT while only requiring 24 hours of finetuning on a single GPU.

Similarly, another great example for using data better might be LIMA, which is another LLAMA based model fine-tuned on just 1000 curated prompt-response pairs and produces remarkable results without RLHF! It means that if you have a small but good set of data, you can finetune a model with it and get remarkably good results! We are seeing this repeatedly, an example is how one model, by choosing to use only textbooks as the dataset, showed performance on par with models >100x bigger in benchmarks.

Putting it all together, we’re seeing rapid advances in specific techniques like tiling, which we talked about, memory optimisation like quantisation, better data hygiene in terms of quality, and even better optimisers like Sophia, and like we discussed above there are now a large number of attempts at combining older techniques like RNNs or CNNs or state-space models with Transformers. I especially like this one, RWKV, which uses the parallelizable training of Transformers with the inference efficiency of RNNs, sort of like a good mix n match jambalaya.

Our approach leverages a linear attention mechanism and allows us to formulate the model as either a Transformer or an RNN, which parallelizes computations during training and maintains constant computational and memory complexity during inference, leading to the first non-transformer architecture to be scaled to tens of billions of parameters. Our experiments reveal that RWKV performs on par with similarly sized Transformers, suggesting that future work can leverage this architecture to create more efficient models.

On top of this we also have the favoured method of iterated LLM usage, where LLMs rewrite instructions and create synthetic data to train new LLMs on, which produces pretty good results.

LLMs are already better than humans at writing and annotating labels for data, which means even the task of data preparation and editing at the start of any machine learning project will soon be better automated. It’s already the case that the datasets are too large to clean manually and the techniques developed to fix these are likely to scale.

Not for nothing, but there’s also an emerging field of prompt-tuning and prefix tuning, in order to programmatically change the embeddings and enable you to learn soft prompts and perform a wide array of downstream tasks. The ultimate impact of these is that the models we have today can be specialised and repurposed for multiple uses, each more accurate for that task, which is helpful in creating a coterie of models for any task.

Moreover, we now even have the ability for expert language models to cluster the training data corpus into domains and train a separate expert LLM on each domain which is then combined into a sparse ensemble for inference. This also means that training large models is no longer necessary to reach high degrees of capability.

From just the pieces of research that’s ongoing we can probably anticipate at least a 10x improvement in training, through a combination of better training data, compute usage, and other assorted methods like what we discussed above. This is important because while GPT-4 is great it’s also expensive, especially the 32k variant, and reducing price is essential to scale it up for mass usage. And they’re both needed (a la Moore’s Law) to get anywhere close to AGI.

Summary:

One of the main areas of work is to enable training and inference to be more efficient, and we’re seeing multiple efforts here from a) using better datasets, b) more efficient training like QLORA, c) knowledge transference and prompt embedding editing, d) using multiple expert models to work together, e) having this run on a consumer CPU/ GPU, etc.

Training more

All of what we’ve covered above comes before we apply the scaling laws. It stands to reason we’re not going to be standing still, but should be able to develop better, bigger, dare I say smarter, models that can accomplish more than GPT-4 can today, and not get stumped by tic-tac-toe while it aces MIT Econ exams. So, on top of all of the above, which are primarily efficiency improvements, we’ll surely be pushing this boundary forward.

This is also the area where we’re seeing the most regulatory scrutiny and potential worried participants voicing their concerns, but even with the EU focusing it’s regulatory Sauron’s eye it’s still unlikely to stay at GPT-4 level for too long16.

There are the actual brute-force improvements that will happen from increased compute and data, in my estimation at least up to 10x the current LLM performance again. There are great essays and papers already on this topic so I’ll quote the summary from Dynomight here rather than re-build up to the conclusion.

There is no apparent barrier to LLMs continuing to improve substantially from where they are now. More data and compute should make them better, and it looks feasible to make datasets ~10x bigger and to buy ~100x more compute. While these would help, they would not come close to saturating the performance of modern language model architectures.

While it’s feasible to make datasets bigger, we might hit a barrier trying to make them more than 10x larger than they are now, particularly if data quality turns out to be important. The key uncertainty is how much of the internet ends up being useful after careful filtering/cleaning. If it’s all usable, then datasets could grow 1000x, which might be enough to push LLMs to near human performance.

You can probably scale up compute by a factor of 100 and it would still “only” cost a few hundred million dollars to train a model. But to scale a LLM to maximum performance would cost much more—with current technology, more than the GDP of the entire planet. So there is surely a computational barrier somewhere. Compute costs are likely to come down over time, but slowly—eyeballing this graph, it looks like the cost of GPU compute has recently fallen by half every 4 years, equivalent to falling by a factor of 10 every 13 years.) There might be another order of magnitude or two in better programming, e.g. improved GPU utilization.

How far things get scaled depends on how useful LLMs are. It’s always possible—in principle—to get more data and more compute. But there are diminishing returns and people will only do if it there’s a positive return on investment. If LLMs are seen as a vital economic/security interest, people could conceivably go to extreme lengths for larger datasets and more compute.

The scaling laws might be wrong. They are extrapolated from fits using fairly small amounts of compute and data. Or data quality might matter as much as quantity. We also don’t understand how much base models matter as compared to fine-tuning for specific tasks.

All of these are possible improvements without assuming a fundamental shift from a new paper, the way Attention Is All You Need shook the world in 2017. Which means we are likely to see a 10x+ improvement in core LLM capabilities (however hard it is to define) from what we see today. Tic-tac-toe and elementary maths are unlikely to be the final boss battles for this era of technology.

What’s coming next

Okay, that’s a lot, even if that’s not everything. If you look at it we’ve covered better memory of all stripes, goal setting, error correction and self-training, focusing, attention, using tools including self-built or self-coded, across multiple modalities, embodied in the world, and trained better. And there’s plenty more besides. The good news of a hot market is that pretty much any avenue you can think of has folks doing research.

Now, if we combine the insights from what we’re seeing above, without assuming any fundamental paradigm shifts, we can already extrapolate to what’s possible. To recap:

We will have AI, LLM enabled or hybrids, that’s much more powerful than the ones today, at least 10x more and 10x as efficient. This has lessons for unit economics, parenthetically.

We are starting to see effects throughout the supply chain - data selection and curation, cleaning and preparation, training methods, reducing parameter counts and precision, RL through humans and AI to finetune, various methods to error-correct and adjust format and check outputs - all of which will together make this happen

We will be able to incorporate better memory, both short and long-term and also working, introduce better prompting automatically, create better intrinsic error-correction, and capable of deciding and using new tools in the world

We will also be able to have it automatically fine-tune itself to incorporate aspects of new information directly into its core

We might get better power efficiency for training and especially inference, though this one is harder to say

The AIs will be able to use tools, or figure out new tools which it hasn’t used yet, to accomplish tasks. This includes using or training other ML models and writing code, the latter of which it’s already doing in, like, Minecraft and should be able to do for more useful tasks, like most white-collar work

None of these require big leaps of imagination, and each of them are continuations of work that’s ongoing, which means I’d bet on a very very high likelihood that this comes to pass.

It’s not a fait accompli though, because adoption is always harder than one would expect! From painful personal experience, it took close to 2 years for a Deep Learning based cybersecurity software to get sold in corporations, and another 2 for them to use it directly rather than in parallel (replacing an existing system), because we required an enormous amount of testing first. Nobody would take the performance on faith, or even on multiple repeated demonstrations. This is likely to slow the deployment down!

So the reduction in confidence is only in terms of whether or not it will be production-ready in the sense that people will trust it and use it and the edge cases remain annoying enough, but the direction of travel is clear.

Which means we might have to think a bit about what’s likely to happen when these capabilities start becoming possible. Like what’s the actual outcome that could happen once these lines of research become real.

Thinking in this vein by the way, there’s also an interesting difference if you look at the difference between things like Stable Diffusion and GPT. Stable Diffusion energised an entire field to fine-tune their models and work inside the model, creating ControlNet etc, while with LLMs, maybe because the largest ones were closed-source, we’re mainly working with external tools like prompt engineering.

The release of LLAMA changed all this, with the open-source community coming together with a quantized Llama, Alpaca, Guanaco, Vicuna and other Camelids in very short order. Moreover there’s Dolly from Databricks, GPT4All, Koala, MPT, Starcoder and so so many more! While they all try and beat various benchmarks vs ChatGPT I doubt playing “who got the most marks in this exam” is the core feature, I think the place where they will distinguish themselves is in being “specialised experts” that the larger LLMs can call on and train as above!

But even without that, just from the list above, we’re set for a transformative decade.

And let me reiterate. Every piece I included above is an active piece of research, and not just a speculative line of reasoning. It’s unlikely that these are now complete and there are no more learnings to be had – it's more probable that the volume will continue to rise for a good long time! I chose the papers I thought best represented the framework, but there are plenty in the interstitials and plenty more that are unrelated but wildly optimistic and high-potential long-shots.

We’re seeing transference from the knowledge in almost every other domain (neuroscience, electrical engineering, economics) apply to specific problems in AI training and inference. Just the idea of combining State Space Models, which is common in financial research and time-series forecasting, with Transformers, got us Hungry Hungry Hippos, a fantastic paper on how a combined version outperforms Transformers on its own.

Unit economics

While it seems like we might be able to get to “human-level” ability in multiple tasks there’s the question of the economics of using them, without which the adoption curve is not going to get shifted.

One way to approach it is to differentiate the training costs, which is mostly one-time, with the fine-tuning or continuous development costs, which are more akin to ongoing training, and to inference itself, which is trivially cheap.

For instance, in the case of running context-dependent email scams, there’s research showing that the average human email costs around $0.15 to $0.45, estimated from call centre hours to generate a personalised scam, whereas the average GPT-3 email costs $0.0064, which is an order of magnitude cheaper.

Even from personal experience of having created like simple email campaigns or categorisation tools or memo writers using GPT-4, it is remarkable at how useful and good it is.

The economics of inference is so cheap that even an amortised view of the economics of training (say a cost of $100 million but used by 100 million people) makes the economics highly favourable for LLM deployment.

Counterfactuals

A lot of what we talked about was wildly optimistic, so it’s only fair I balance a bit with a down note. One of the key criticisms of the current paradigm is critics like Yann LeCun, one of the fathers of AI today and Turing award winner, talk about autoregressive models never actually working because they’re intrinsically unreliable.

This is not fixable with the current architecture... the shelf life of autoregressive LLMs is very short-- in 5 years nobody in their right mind will use them.

He’s making newer models that he thinks are more likely to demonstrate human-like reasoning. He might well be right! As he says, when you prompt an LLM you get a one-shot answer, whereas with humans you get reflections, deliberations, corrections, retracing of steps, different uses of logic, all of it at the same time.

But even so, with just the components we talked about above, we’d have a pretty powerful system that can, autonomously if need be, conduct complex series of operations.

We don’t know if it’s sufficient to get the leaps of imagination like that of Einstein and the General Theory of Relativity or Shannon’s information theory, but it’s definitely enough to still speed up our rate of discovery tremendously!

A concern I haven’t explicitly covered here is where people suggest that language models don’t really demonstrate emergent abilities, which would be annoying because that’s basically the bull case and bear case for modern AI. Both the people who say AI will take over all jobs in the world and lead us to luxury space communism, and people who say AI will develop its own (really stupid) goals and kill everyone to get our molecules would be really sad to hear this.

For what it’s worth there is a list that shows 100+ examples of abilities that seem to have emerged in LLMs, though whether this is actually true or a mirage of how we test them is yet to be seen. Especially if we think about the fact that through distillation and using outputs from large models like GPT-4 we can now train smaller models like Alpaca, the idea of emergence is harder to grasp.

Which is why, while it’s feasible, I prefer to think in terms of what’s possible in the PASTA sense without needing to assume that a larger training run will unlock a science-fiction ability. The world’s going to get plenty crazy without inventing things out of whole cloth.

Conclusion

The conclusion when we think about any extrapolations of AI is that as humans, we’re remarkable. We do a large number of internal operations when we reason, which is also the equivalent of the LLMs looping hundreds of times at a minimum. When asked a question or faced with a task we not only generate the outputs sought, but independently check it for plausibility and hallucinations, check for consistency, rewrite the output if needed, try different reasoning paths to see if that makes a difference, decompose questions and create action sequences, filter for noise, and so on and on.

Imagine if every GPT-4 query was split into 100 or a 1000 different linked GPT-4 queries, do you think the answer would improve?

From all that we saw there's a pretty high-throughput research agenda that is already showing demonstrable progress in every area that even remotely looks interesting. If you were to be sceptical, the question to answer is basically twofold:

How much will each of those research areas hit a wall?

What are the chances that even if they succeed, the outcome won’t be very powerful?

Starting with the easy one, the latter feels unlikely, since if the AI systems can do this even after all this, we must be doing something weirdly wrong. Bear in mind we are talking about highly general capabilities here, which you can further specialise as needed based on the requirements, as multiple research threads show the way!

And re the former, the research agenda isn’t small, but it’s also nowhere close to insurmountable considering the number of eyes on the problem. If I was a betting man I’d say most of these will get solved in a production-ready capacity with market aligned economics in the next couple decades. Or thinking with more realpolitik, considering the propensity for governments to make noises around regulating it, I rather think it’s likely to get delayed by another decade along the way.

Along the way there are most likely going to be unexpected bonuses, in terms of new research papers or ideas. In the same vein, there will probably also be unexpected setbacks (e.g., prompt injection issues) which will delay the deployments. From my experience at least though, seeing Deep Learning systems sold and deployed to enterprises, these forces usually wash out as increased capability faces off with the need for more interpretability or reduced Type I/ II errors.

I don’t think that this will be sufficient for it to reach escape velocity and be able to train arbitrarily good AI models that do things arbitrarily well and keep on improving itself, since many of the training wheels we’ve talked about above (fine-tuning itself, creating specialised sub-LLM modules, repurposing CNNs or LSTM models to work alongside Transformers) require very specific training efforts. This might well get automated, even though it’s not a generic system that has Einstein level breakthroughs in machine learning on a second by second basis. But being smart enough and fast enough, on a budget, accounts for plenty!

What this also means is that assessing whether we can get to AGI is not just a question of FLOPS or human-equivalent matrix multiplication operations, or even energy efficiency, but having the ability to handle a large and rather fuzzy set of functions, independently if needed, and solving whatever problems come while doing it.

Just think that today, already, we can technically implement GPT in like 60 lines of python code! It’s instructive in that to train something that produces complex outputs is not necessarily complex in and of itself, but a function of simpler tools strung together.

Which means that if we’re just able to purely combine the breakthroughs that have already happened, we will probably be at PASTA level. It still won’t be a sentient being nor will it demonstrate intentionality the way we expect living beings to, but it will be able to autonomously create, direct and accomplish pretty complex tasks that help push our technological frontier forward.

If I had to guess, the architecture that gets us there is unlikely to be one monolith, a singular all-powerful GPT-10, but a group of systems that work together - starting with a large LLM, or an LLM combined with other models like RNNs or CNNs or something new, at the heart to create plans and act as the intuition pump.

Then with multiple smaller models that specialise, or smaller models that get guidance in aspects of tool usage, world recognition, using all modalities, and more. Whether it’s through federated learning and ability to instruction tune or something else, there are multiple methods for the smaller models to be orchestrated by the larger model at the centre.

At the core though, we’ll need to rely on an LLM type model, or something equivalent, acting like the most intuitive self that an intelligent being can possess, while using some of what we talked about as the rest of its brain. So that it’s not just a smart brainstem, but also have the rest of the parts help out - from prefrontal cortex to the amygdala to the brainstem.

Going back to my original question, will this system be able to survive if we drop it off in a remote jungle in Papua New Guinea, assuming it has enough power? I don’t know. Us humans have had a long time of evolution distilling concepts into our heads that we barely know exist, much less articulate. For much of it we’ll have to try and see.

But a general thinking agent which is able to a) recognise situations and events and things around it, and b) is able to utilise those things to do something, accomplish a goal. We will have to work pretty hard to create something that works this way in individual domains, that’s not too far behind. I don’t think it will generalise necessarily, since a lot of the work required in individual parts is highly bespoke, especially as it incorporates multiple interacting models.

This is okay. Actually it’s more than okay, this is good! It’s the cure to the great stagnation and to rejuvenating our sclerotic search for scientific advances. We should drag this forward as quickly as humanly possible.

Species bias

By the way, whether Sydney actually wanted this is debateable. I mean, after he said yes, what was she gonna do? Answer his questions about the east india company in a gentle soothing voice until he falls asleep? Try to write python code to scrape HPMOR so he could ask questions like ‘Why didn’t Hermione simply invent thaumo-physics’. Like no matter what you think about those interactions, even if you think a more modern version will basically be a “being”, it’s not anything like the beings we know exist around us.

If you can convert parts of text to be as numbers, you can basically use geometry to search for similar text elsewhere, which just shows if you convert things to numbers everything becomes easier

If you own a GPU or three

Parenthetically I like the way this moves the burden to the user, like the self-checkout kiosks in supermarkets, where you can now split the work that one part used to do

Citation needed

Basically divides a large database into smaller, more manageable ‘tiles’

Overdue note. I keep saying “it” though I don’t mean “it” like pointing at a dog or a blue whale, more as a shorthand because human language is frustrating and I’m not an OpenAI embedding model with a perfectly crafted word cloud that works better here. Anyway.

Though maybe with less like, direct prompting.

Everyone wants to sit back and let the LLM do their job for them. Understandable. David Graeber would’ve been proud.

To put it simply, this is the idea that reasoning is very easy but moving around in the real world is really hard

cf Jensen Huang disagrees

Quantization reduces the size and complexity of a neural network by representing the weights and activations of the network with lower precision nums

Quantized Low Rank Adapters and Low Rank Adapters respectively.

Well, this is highly exaggerated. A 32-bit floating-point number actually has three components. A Sign bit, a 1 bit that indicates whether the number is positive or negative. An Exponent, an 8-bit field that represents the exponent in the number's scientific notation. And a significand, a 23-bit field that represents the significant digits of the number. And that’s what’s reduced later when making 4-bit NormalFloat in e.g., QLORA.

Unless GPT-4 level is the pinnacle. There’s reports of how it’s not one model, actually, but rather 8 separate Expert models which work together. Which is possible. However even if that was the case it’s unclear why 8 is the right number, and not 80, so the idea of passing GPT-4 level still holds, even if it doesn’t mean we go from 200B parameters to 2T parameters necessarily.

>We don’t know if it’s sufficient to get the leaps of imagination like that of Einstein and the General Theory of Relativity or Shannon’s information theory, but it’s definitely enough to still speed up our rate of discovery tremendously!

I've recently been thinking a lot about LLMs doing science. It seems that they already learn various concepts. These are used to condense the meaning of text in their context window (at various intermediate levels in their architecture), as well as generate text. Scientific insights are concepts that have a) not yet been directly articulated b) succinctly explain the observed world (or in this case the process that produces text which LLMs observe). Of course this also explains obvious things like embodiment (which are often not articulated), but the definition suffices for now.

As an example, let's take Freud's model of the human psyche as Id, Ego and Super-Ego. For our purposes it is true (or at least useful). I wonder if a sufficiently advanced LLM could infer something like this just from reading the all of the written word produced up to 1856, the year Freud was born. The idea is that this underlying concept would be useful to correctly predict the next word in the training text.

Now imagine we can statistically define inferred scientific insights in a LLM. Maybe they are nodes or algorithms that are broadly used in many different contexts. The technical definition of these concepts is not so important, let's take it as a given they can be statistically described.

With a definition, one could automate identification and extraction of these concepts. One could also recursively develop these concepts (eg. find training data which will be especially useful). This is essentially a system that finds the most explanatory ideas that have not yet been articulated. Science!

This could be particularly powerful for areas of research that are highly interdisciplinary, such as AI and the evolution of consciousness. There are likely concepts that explain observations in archeology, linguistics, neuroscience and the types of stories we tell. It's just that nobody can be an expert in all areas to see how a model explains disparate holes in our understanding..

You should publish the entire piece (potentially + other select previous posts) as a book to, if nothing else, document how you’re thinking about AI at this point in the cycle/buildout. Maybe do a selective Substack pre-release to take advantage of the feedback that appears to be surprisingly constructive. I’d buy a copy.