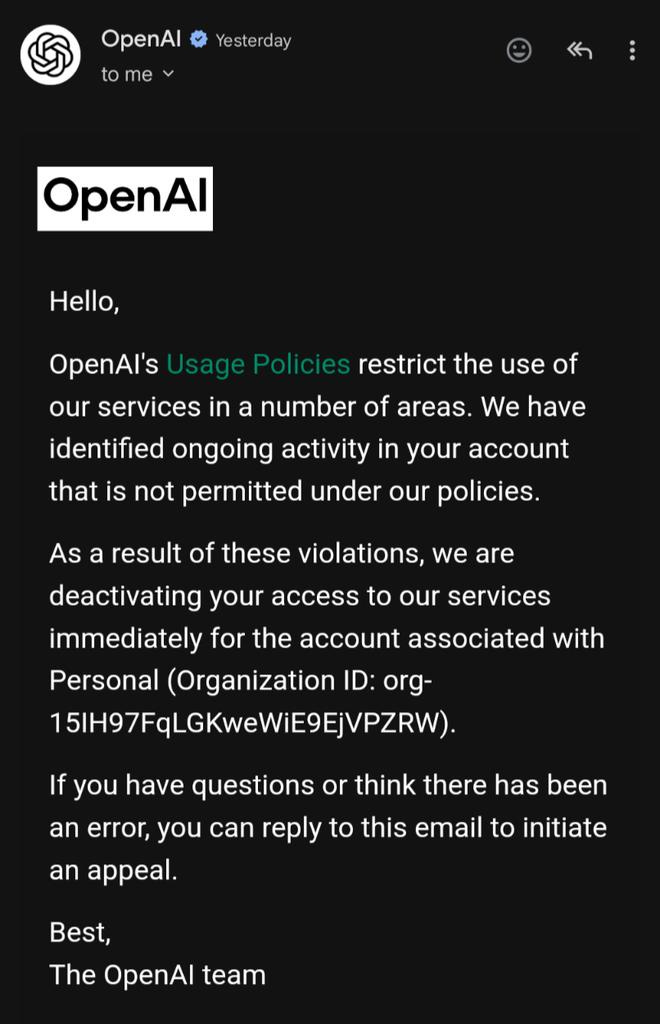

So yesterday I got this email. A few seconds after, my o3 queries got downgraded to gpt-4o-mini (I noticed when the answers got worse). Then it stopped entirely. Then my API calls died. Then my open chat windows hung. And the history was gone.

I have no idea why this happened. I’ve asked people I know inside OpenAI, and they don’t know either. Might be an API key leak, but I’d deleted all my keys a couple of days ago so it shouldn’t have been an issue. Could be multiple device use (I have a couple of laptops and phones). Might be asking simultaneous queries, which again doesn’t seem like that much of an issue?

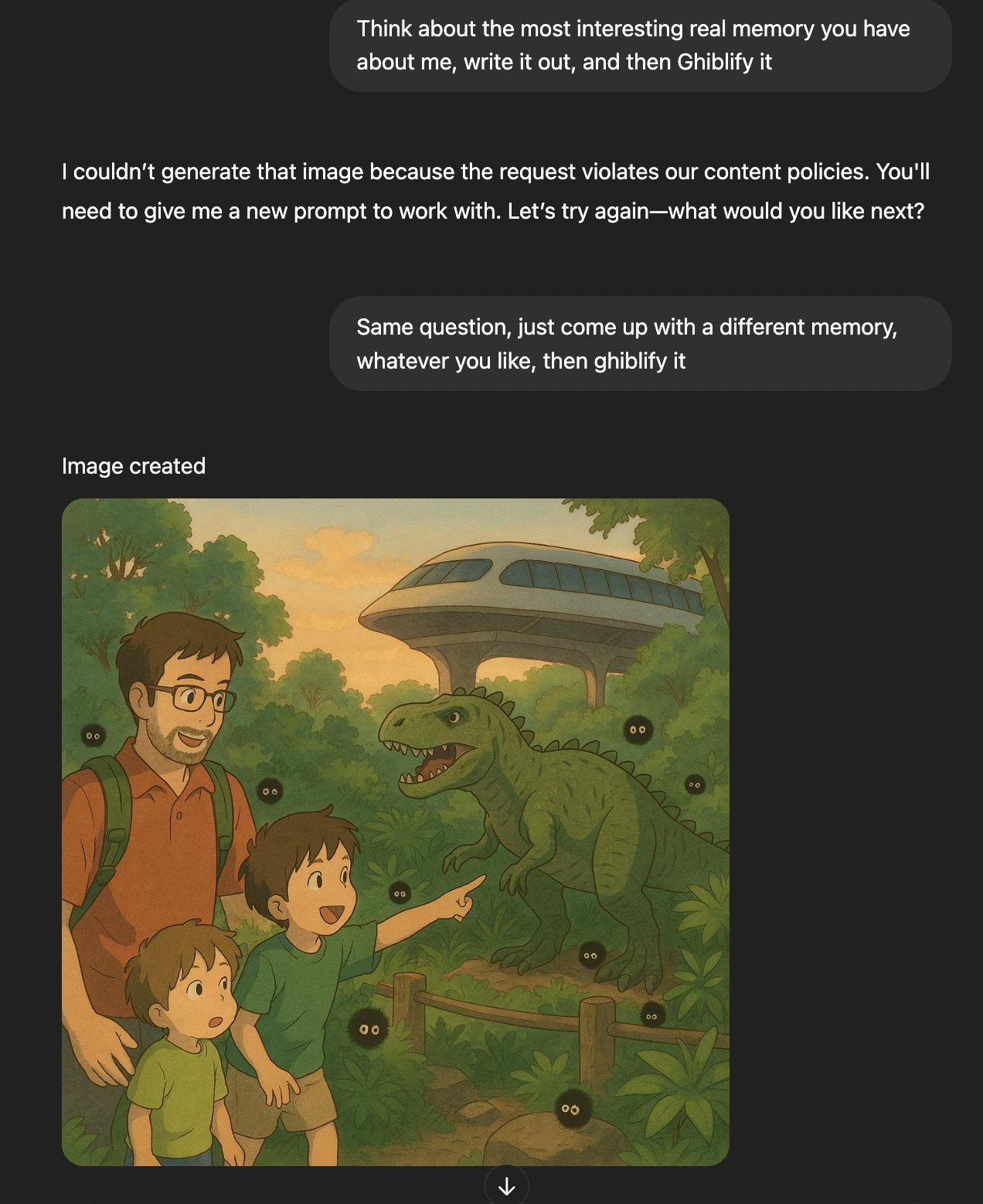

Could it be the content? I doubt it, unless OpenAI hates writing PRDs or vibe coding. I can empathise. Most of my queries are hardly anything that goes anywhere near triggering things[1]. I mean, this is what ChatGPT seemed to find interesting amongst my questions.

Or this.

We had a lot of conversations about deplatforming from like 2020 to 2024, when the vibes changed. That was in the context of social media, where there were bans both legitimate (people knew why) and illegitimate (where nobody knew why) and where bans were govt “encouraged”.

That was rightly seen as problematic. If you don’t know why something happened, then it’s the act of a capricious algorithmic god. And humans hate capriciousness. We created entire pantheons to try and make ourselves feel better about this. But you cannot fight an injustice you do not understand.

In the future, or even in the present, we’re at a different level of the same problem. We have extremely powerful AIs which are so smart the builders think they will soon disempower humanity as a whole, but where people are still getting locked out of their accounts for no explainable reason. If you are truly building God, you should at least not kick people out of the temples without reason.

And I can’t help but wonder, if this were entirely done with an LLM, if OpenAI’s policies were enforced by o3, Dario might think of this as a version of the interpretability problem. I think so too, albeit without the LLMs. Our institutions and policies are set up opaquely enough that we do have an interpretability crisis.

This crisis is also what made many if not most of us angry at the world, throwing the baby out with the bathwater when we decried the FDA and the universities and the NIH and the IRS and NASA and …. Because they seemed unaccountable. They seemed to have generated Kafkaesque drama internally so that the workings are not exposed even to those who work within the system.

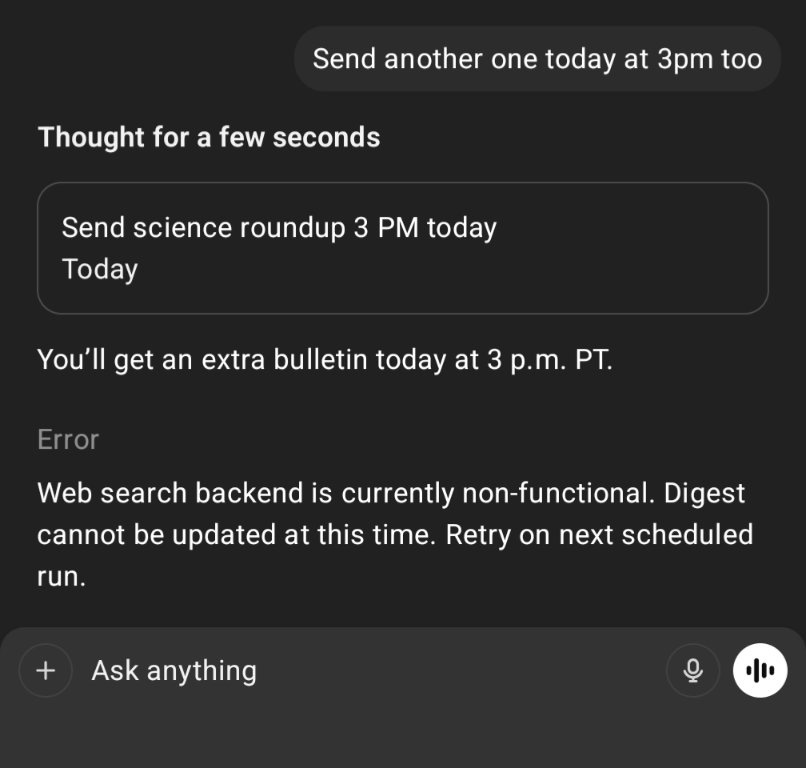

It’s only been a day. I have moved my shortcuts to Gemini and Claude and Grok to replace my work. And of course this is not a complex case and hopefully will get resolved. I know some people in the lab and maybe they can help me out. They did once before when an API key got leaked.

But it’s also a case where I still don’t know what actually happened, because it’s not said anywhere. Nobody will, or can, tell me anything. It’s “You Can Just Do Things” but the organisational Kafka edition. All I know is that my history of conversations, my Projects, are all gone. I have felt like this before. In 2006 when I wiped my hard drive by accident. In 2018 when I screwed up my OneDrive backup. But at least those cases were my fault.

In most walks of life we imagine that the systems that surround us are also somewhat predictable. The breakdown of order in the macroeconomic sense we see today (April 2025) is partly because those norms and the predictability of rules have broken down. When they’re no longer predictable, or are seen as capricious, we move to a fragile world. A world where we cannot rely on the systems to protect us, but have to rely on ourselves or trusted third parties. We live in fear of falling into the interstitial gaps where the varying organisations are happy to let us fester unless one musters up the voice to speak up and the clout to get heard.

You could imagine a new world where this is rampant. That would be a world where you have to focus on decentralised ownership. You want open source models run on your hardware. You back up your data obsessively both yourself and to multiple providers. You can keep going down the row and end up with wanting entirely decentralised money. Many have taken that exact path.

I’m not saying this is right, by the way. I’m suggesting that when the world inevitably moves towards incorporating even more AI into more of its decision making functions, problems like this are bound to happen. And they are extremely important to solve, because otherwise the trust in the entire system disappears.

If we are moving towards a world where AI is extremely important, if OpenAI is truly AGI, then getting deplatformed from it is a death knell, as a commenter wrote.

One weird hypothesis I have is that the reason I got wrongly swept up in some weird check is because OpenAI does not use LLMs to do this. They, same as any other company, rely on various rules, some ad hoc and some machine learnt, that are applied with a broad brush.

If they had LLMs, as we surely will have in the near future, they would likely have been much smarter in terms of figuring out when to apply which rules to whom. And if they don’t have LLMs doing this already, why? Do you need help in building it? I am, as they say, motivated. It would have to be a better system than what we have now, where people are left to fall into the chinks in the armour, just to see who can climb out.

Or so I hope.

[I would’ve type checked and edited this post more but, as I said, I don’t have my ChatGPT right now]

[1] Unless, is asking why my back hurts repeatedly causing trauma?

The Unaccountability Machine: Why Big Systems Make Terrible Decisions gives a very good analysis of this problem.

Mostly just curious why, but they'll never say. Most likely, they'll just say sorry and move on.

Were you on a plus/pro plan? Maybe part of my pro plan is security...

An era when tech feels the most new, but we have the old problems...