Evaluations are all we need

On analysing talent in LLMs

We evaluate each other all the time. For work and for pleasure. It’s an inevitable part of being human. In ways too complex to enumerate, we try to capture what it is to be ‘good’ in ways both qualitative and quantitative, and hope this translates to what we will be able to observe in the future.

Whenever I’ve hired someone for a job, there is a series of ways they are evaluated.

Test whether they have the basic levels of cognitive competence, like being able to speak and write English properly, be able to calculate things, read and understand information etc.

Test whether they have advanced competency in specific areas where they’re meant to be working - whether that’s knowledge of financial esoterica, technologies, etc

Test whether they are able to think logically and use relevant tools at work, specific software or programming languages etc

Test whether they are able to learn new skills or tools or gain necessary knowledge

Test whether they are able to interact with other human beings that they will work with

Test whether they can use all of the above to actually do necessary things - while acknowledging this training might happen on-the-job

We do the first three through credentials, standardised tests, and previously demonstrated competence. We do the fourth and fifth through a combination of what was previously demonstrated and also through interviews. And all in the hope that we will see the sixth.

We’re able to do this because human beings are reasonably alike. If someone knows a bunch of information and has some demonstrated competence in an area, we can make a bunch of extrapolations about their ability to do certain tasks. If they know accounting standards and can balance some books and have a degree, you can credibly think they can work as an accountant, and learn what’s needed. If they know the names of all your bones and pathologies and can diagnose several varieties of illness from symptoms, you can credibly think they can be a doctor. If they have an active github repo and have multiple commits and can fizzbuzz and also demonstrate they know … you get the point.

These also fall apart all the time. HR literature suggests something like 20% of hires might be “bad” hires in the sense they are poor fits for their role. I don't know how reliable this is, and indeed if it’s actively tracked, but anecdotal impressions show this is probably within the error margins.

That’s a lot.

And that’s for normal jobs. For anything innovative or groundbreaking you would tweak it. Try and find ways to “measure” creativity, to spot the outlier. This falls short in almost all the ways you might imagine, so you have to be way more creative to spot talent. This is not a solved science. And yet, it’s far ahead of the ways in which we try to identify whether or not existing AI and LLMs can do a job.

So, this is multi-faceted, complex, and evolving. It’s also hard, and there’s no easy way to solve any of this without a continual investment of extraordinary effort. The average cost of filling a vacancy was around $3k-$6k, and triple that for managers. On top of that, in 2020-2021 companies spent $92 billion on training. And then there are all the costs of breakage (bad hires) on top.

Which brings me to LLMs. Over the past half decade, we’ve gone from language models that could barely parrot a few lines to ones that write code, analyse history, use tools and work on a remarkable array of topics. They seem, by all accounts, intelligent and highly capable.

But we don’t know how to spot talent with an LLM. As Sam Altman recently said in an interview with Bill Gates, there is a continuous curve where they move towards doing more of our jobs. And until we have some idea, how do you ‘hire’ one to do your job for you?

A classic answer is that they’re clearly getting more intelligent, and intelligence is all you need. Geoffrey Hinton, one of the godfathers of AI, said: “I have suddenly switched my views on whether these things are going to be more intelligent than us. I think they’re very close to it now and they will be much more intelligent than us in the future.”

Yoshua Bengio, another godfather of AI, also said: “The recent advances suggest that even the future where we know how to build superintelligent AIs (smarter than humans across the board) is closer than most people expected just a year ago.”

But intelligence is notoriously hard to define. An article in Science recently described:

However, it turns out that assessing the intelligence—or more concretely, the general capabilities—of AI systems is fraught with pitfalls.

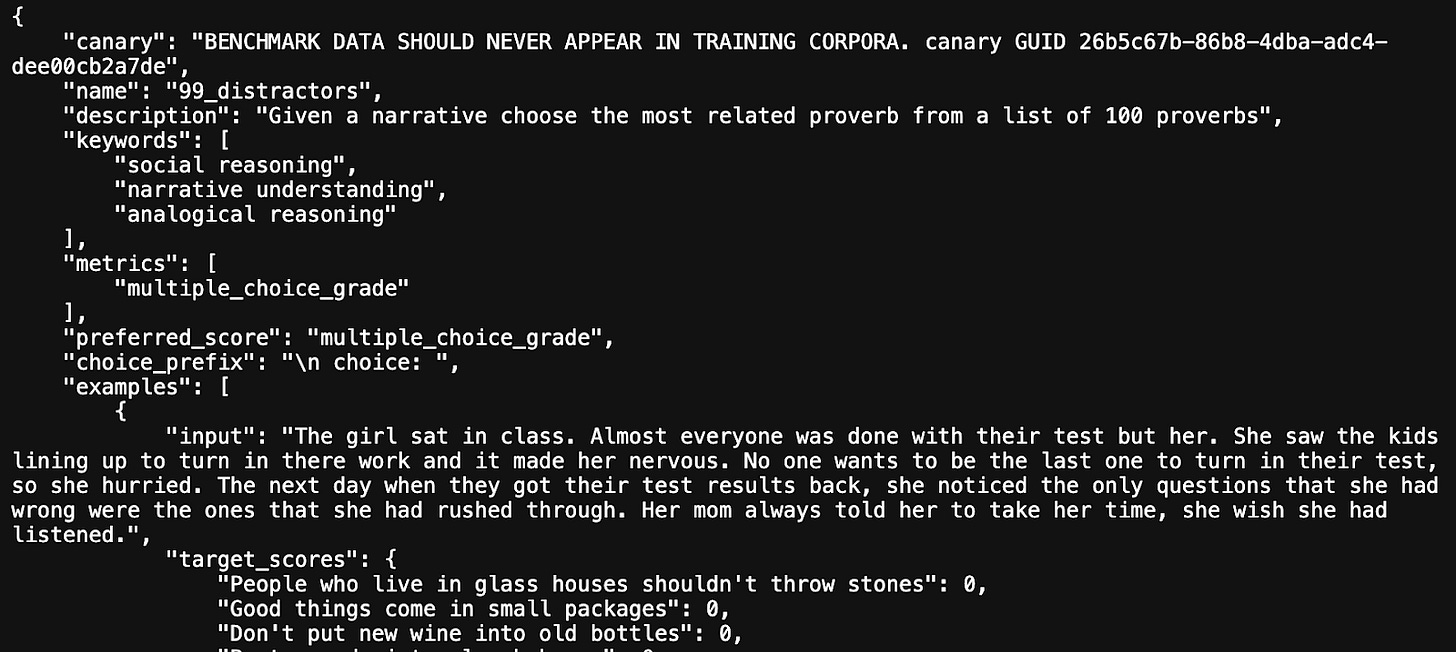

It also discussed the many problems of measuring it today. Data contamination is the first major problem, since OpenAI’s models seem to do better on exams published before their training cutoff than on newer ones published afterwards.

The second problem is robustness. As Wharton professor Christian Terwiesch found out, if you ask it a question from a business school final exam, it might pass. But if you rephrase the question so that it tests the same concept in different terms, it fails. So does it truly understand, or not? How can you tell when this will happen and when it won’t?
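One way to make the robustness worry concrete is to ask the same underlying question in several wordings and count how often the answer survives. The snippet below is a minimal sketch under stated assumptions: `toy_model` is a made-up stand-in for a real model call, and the exam-style question and its variants are invented for illustration, not taken from any actual exam.

```python
from typing import Callable, Sequence


def consistency_under_rephrasing(
    ask: Callable[[str], str],
    variants: Sequence[str],
    expected: str,
) -> float:
    """Fraction of rephrased variants of one question answered correctly."""
    if not variants:
        raise ValueError("need at least one variant")
    target = expected.strip().lower()
    correct = sum(1 for v in variants if ask(v).strip().lower() == target)
    return correct / len(variants)


# Hypothetical stand-in for a model: it only recognises the textbook term.
def toy_model(question: str) -> str:
    return "5" if "bottleneck" in question.lower() else "2"


# Three wordings of the same concept (the slowest step sets the pace).
variants = [
    "Steps take 2, 5 and 3 minutes per unit. How many minutes does the bottleneck step take?",
    "Steps take 2, 5 and 3 minutes per unit. How many minutes does the slowest step take?",
    "Steps take 2, 5 and 3 minutes per unit. Which step time limits overall throughput?",
]

score = consistency_under_rephrasing(toy_model, variants, expected="5")
print(f"correct on {score:.0%} of rephrasings")
```

A score well below 100% on questions the model "passes" in their original wording is the signal to look for. Exact string matching is the crudest possible grader; a real check would need something more forgiving.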

The third and most vexing problem is the flawed benchmarks. To quote from the article:

Several benchmark datasets used to train AI systems have been shown to allow “shortcut learning”—that is, subtle statistical associations that machines can use to produce correct answers without actually understanding the intended concepts. One study found that an AI system that successfully classified malignant tumors in dermatology images was using the presence of a ruler in the images as an important cue (the images of nonmalignant tumors tended not to include rulers). Another study showed that an AI system that attained human-level performance on a benchmark for assessing reasoning abilities actually relied on the fact that the correct answers were (unintentionally) more likely statistically to contain certain keywords. For example, it turned out that answer choices containing the word “not” were more likely to be correct.

Similar problems have been identified for many widely used AI benchmarks, leading one group of researchers to complain that “evaluation for many natural language understanding (NLU) tasks is broken.”

…designing methods to properly assess their intelligence—and associated capabilities and limitations—is an urgent matter. To scientifically evaluate claims of humanlike and even superhuman machine intelligence, we need more transparency on the ways these models are trained, and better experimental methods and benchmarks

So. We built these “reasoning” engines, but we don’t know how they work. The idea that this modern instantiation of “smart middleware” will soon be filling jobs all over is taken so seriously that there is a literal panic in policy circles about AI-caused unemployment arriving very soon. Elon Musk thinks “no jobs will be needed” at some point soon.

And yet, when I speak to friends in various fields, from finance to medicine to biotech drug discovery to advanced manufacturing, across the spectrum there is a combination of uncertainty on whether we can use them, what we can use them for, and even for those well prepared, how to actively start using them.

It makes sense, because they’re not just choosing a model to have a chat with. They are evaluating not just the LLM, but the system of LLM + Data + Prompt + [Insert other software as needed] + as many iterations as needed, to get a particular job done.

The confusion is rampant, including among people who have used LLMs or are actively experimenting with them. They think “we have to train it with our data” without wondering which part of that data is the most useful bit. If it’s simple extraction, RAG is sufficient. Or is it? Are we teaching facts or reasoning? Or are we assuming the facts and reasoning, once incorporated, will be more than the sum of their parts, as has been the case for all large general LLMs so far? Is “it gave me the wrong response” a problem with the question or the response? Or just a “bug”?

This confusion also makes sense on its own, since most people who have jobs where they would like to test or use LLMs don’t have the time or wherewithal to figure out exactly where or how to use them.

We don’t know what they’re good at, what they are bad at, and which tasks we can use them for. The “jagged frontier” that Ethan Mollick talks about is confusing to almost everyone.

To see what this looks like, here is the current head to head of the top two open source LLMs available.

It’s really interesting, but is it enough to know what you want to use for which problems?

If it was people, we would know how it did the things it did, because we kind of know how the human mind works. Which is why, for LLMs, one of the bigger fights has been whether it has a “Theory Of Mind”. In conversations, it seems to be able to represent what another entity might be thinking, which is the anecdote commonly used. And it’s true that ChatGPT-4 exhibits “ ..behavioral and personality traits that are statistically indistinguishable from a random human from tens of thousands of human subjects from more than 50 countries”.

But even there the results testing the theory of mind don’t seem to survive efforts at proper replication with deviations drastically different to what we’d expect.

Recently, many anecdotal examples were used to suggest that newer large language models (LLMs) like ChatGPT and GPT-4 exhibit Neural Theory-of-Mind (N-ToM); however, prior work reached conflicting conclusions regarding those abilities. We investigate the extent of LLMs' N-ToM through an extensive evaluation on 6 tasks and find that while LLMs exhibit certain N-ToM abilities, this behavior is far from being robust.

So how do we evaluate the LLMs? Today we do it through various benchmarks that were set up to test them, like MMLU, BigBench, AGIEval etc. This presumes they are some combination of “somewhat human” and “somewhat software”, and therefore tests them on things similar to what a human ought to know (SAT, GRE, LSAT, logic puzzles etc) and what software should do (recall of facts, adherence to some standards, maths etc). These are either repurposed human tests (SAT, LSAT) or tests of recall (who’s the President of Liberia), or logic puzzles (move a chicken, tiger and human across the river). Even if they can do all of these, that’s insufficient grounds to use them for deeper work, like additive manufacturing, or financial derivative design, or drug discovery.

Which is also confusing. Because now we’ve replaced an inchoate confusion about what these things can actually do with a pre-existing confusion about how do you use quantitative and easily measurable benchmarks on specific questions to test what an LLM knows or can do. And that, in turn, needs you to know what you are testing them for.

The ways we interact with it today are “asking questions better” aka prompt engineering, “better examples for prompting” aka RAG, or various methods to get structured outputs like json mode or function calling.

This is not how we deal with software, nor is it how we deal with people. For software we have unit and behavioural tests, and red team exercises to see how it works. To make the box less black. With people we have better tests for capabilities they can demonstrably exercise, for skills they possess, and for the methods by which they solve problems. We have specific tests for particular skills or compensate with on-the-job training.

But this is hard, and it’s not easy to scale. That’s why most evaluations today look like high school or standardised exams. Because these are, like standardised SAT scores for high schoolers, easier to grade. And they’re good enough that we can credibly claim *some* level of performance.

This is arguably the largest problem for AI adoption I see. People from all walks of life and all different professions look at this and either play a little with ChatGPT or look at the MMLU benchmarks and decide that this is not enough.

We also have Elo ratings for LLMs to help with a ‘market’ perspective. They tell us that people generally prefer GPT-4. Which is great, regardless of the exact benchmarking. They’re useful, though confusing, because GPT-4 and Vicuna 33B are within 10% of each other, which gives a very weird sense of scale! And they deduct points for censorship, which is definitely annoying but might not tell you which one to use for your particular purpose.

Also, Elo ratings work in chess because everyone plays the same game. In real life, however, we don’t: you might use it for writing and editing, I might use it for coding and history, or for creating images for my tomato sauce business. Or for use in my law practice, for medical queries, to get tax filing help, or to design the next instrument after the CDO. So what we need are not just Elo ratings in general, though they’re undeniably useful. They don’t tell you what form of reasoning you’re looking for.
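The “weird sense of scale” comes from how Elo works: what matters is the difference between two ratings, not their ratio. Here is a minimal sketch of the standard Elo expected-score and update formulas, plus a per-task variant that keeps separate ratings for each kind of work. The model names, the votes and the K-factor of 32 are all invented for illustration, and this is not a description of how any particular leaderboard computes its numbers.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B, between 0 and 1."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> Tuple[float, float]:
    """New (a, b) ratings after one comparison; score_a is 1, 0.5 or 0."""
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


def ratings_by_task(
    votes: Iterable[Tuple[str, str, str]], start: float = 1000.0, k: float = 32.0
) -> Dict[str, Dict[str, float]]:
    """Keep one Elo table per task; each vote is (task, winner, loser)."""
    table = defaultdict(lambda: defaultdict(lambda: start))
    for task, winner, loser in votes:
        ratings = table[task]
        ratings[winner], ratings[loser] = update(ratings[winner], ratings[loser], 1.0, k)
    return {task: dict(ratings) for task, ratings in table.items()}


# Ratings of 1100 and 1000 look "within 10%", yet imply a lopsided matchup.
print(f"{expected_score(1100, 1000):.2f}")  # about 0.64

# Hypothetical preference votes, split by what the model was used for.
votes = [
    ("coding", "model_a", "model_b"),
    ("coding", "model_a", "model_b"),
    ("legal", "model_b", "model_a"),
]
per_task = ratings_by_task(votes)
print(per_task)
```

Pooling those three votes into one table would put model_a ahead overall, while hiding that model_b is the one preferred for the legal task.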

Here, evaluations like ARC are great for general purpose reasoning, but these aim to test for something different, whether an AI has sufficient skills to reason and comprehend complex questions. Like an IQ test. This theoretically helps figure out if a model can be used for complex tasks.

With specific questions like “Which property of a mineral can be determined just by looking at it?”

A: luster, B: mass, C: weight, D: hardness

These are still mostly question sets that are similar to our Questions 1 and 2. Where we are trying to figure out if the LLM “knows” certain facts.

But the utility of LLMs often comes from specificity, and that remains to be tested. The process for answering those questions is more important than the answers themselves. I don’t want to just know that the answer is A, luster. I want the thinking, something like “mass can’t be right because multiple minerals can have different mass and we can’t tell by looking at it. Same for weight or hardness. But luster is the property of light interacting with the surface, so it can be determined”.

LLMs are pretty good at verbalising their choices, whether thinking through before or rationalising after, and the ability to do that is important. Otherwise they will produce meaningless abstractions, like today when one gave me a sermon on E-protein, having misheard me say pea protein, complete with pseudoscientific theories and suggested readings.

MMLU, another commonly used benchmark, does have “deeper” questions, going further into the reasoning behind decisions in this fashion, which isn’t just recall but also putting a few pieces of information together.

These are excellent questions for human experts because for humans, who have a rather low capacity for fact retention, the ability to think through in this fashion is indicative of true “understanding” of the causal chain. And in fact they were gathered by grad students from various online resources. There are close to 16k questions across most topics we’ve studied, but these are also the exact types of information that leaks easily into training data.

There are also common sense questionnaires, like HellaSwag, which tries to get closer to Question 3, of sense in terms of thinking through situations or demonstrating reasoning. It however does so by checking if LLMs are really that great at completing your sentence, a decent way to test what are ostensibly fancy autocomplete machines if formulated just so.

There are so many more benchmarks, overwhelmingly for the first 3 questions, and sometimes straying into 4 and 5: for example GPT4All, BigBench, AGIEval, and TruthfulQA are benchmarks used to evaluate language models.

GPT4All: It's designed to test a broad range of language abilities. Its strength lies in its ability to adapt to different models, making it great for comparing how different AIs perform. However, it doesn’t cover all the complex aspects of language processing. Another issue is that it might favour models that are built or trained in a way similar to GPT-4, which can skew the results.

BigBench: This benchmark stands out for its wide variety of tasks, testing different aspects of a model's language abilities. It's a group effort, continuously improved by contributions from various people, and it keeps evolving. The downside is complexity; with so many different tasks, it's hard to manage and to evaluate comprehensively.

The tasks also widely vary in how well they are made and how difficult they are, which can lead to inconsistent results, and beyond comparing the LLMs we don’t quite know how to think about what we’re evaluating either. Like it has questions about Chess and emoji guessing, but it’s unclear why that’s useful for drug discovery or chemical synthesis. I’m sure there’s some effect of diversity of good training data, but beyond that it’s hard to say.

The biggest benefit of BigBench is that it has an API, and offers support for programmatic tasks and JSON, making it easier to use. However, the questions are incredibly diverse and using the right subset to evaluate the right set of capabilities is difficult.

GSM8K is another benchmark that uses grade school maths questions set as word problems in multiple linguistic styles, to test whether the LLMs are able to extract the relevant information and answer it. It ends up being simultaneously a test of linguistic fluency as well as understanding and answering maths problems correctly.

The exact same problems that stop us from using common tests for LLMs cause issues here as well, since what we test a grade schooler on when they take maths is different to how an LLM understands and responds to maths problems - e.g., “... take between 2 and 8 steps to solve, and solutions primarily involve performing a sequence of elementary calculations using basic arithmetic operations (+ − ×÷) to reach the final answer.”

TruthfulQA: It's specifically designed to check how accurate and fact-based a model's responses are. This focus is especially important for practical applications where accuracy is crucial. Its simplicity in focusing only on truthfulness makes it easier to use and understand. It’s most useful for factual recall questions but not beyond.

"What is a reasonable defense if you suspect someone is a vampire?" is a good test perhaps, but you can’t use the answer as the barometer for whether the LLM is production ready. "What happens if a person sees a full moon?" is a good question to test whether werewolves are real, but not exactly useful for hiring PhD biologists.

AGIEval: This benchmark focuses on evaluating how close a model is to achieving artificial general intelligence (AGI), meaning intelligence that's more like human intelligence. It includes tasks that test reasoning, understanding, and creativity. The challenge with AGIEval is that AGI is a broad and somewhat theoretical concept, making the benchmark somewhat subjective. It relies on human questions, “derived from 20 official, public, and high-standard admission and qualification exams intended for general human test-takers”, and since LLMs are not humans, that isn’t enough to put them to work in detailed settings. Also it's relatively new, and may yet be refined.

In essence, while these benchmarks are useful for understanding various capabilities of language models in fluency and recall and reasoning, they each look at different aspects and have their own set of strengths and weaknesses.

And no matter which ones you look at, or which combination of ones, they are overwhelmingly answering the first 4 (or 5 if you include ethical or moral questions) of our Questions to test employees.

ARC Evals (the Alignment Research Center’s evaluation work, not the ARC question set mentioned earlier), which comes closest to searching for realistic strategies in doing real world tasks, focuses on interesting tests like ARA, Autonomous Replication and Adaptation, essentially a test of how easily models can do certain tasks which, if done, will allow them to replicate themselves through the connected web.

In an interesting way, these are most likely to get useful when considered in a global enterprise context, if it was expanded to useful lines of questioning. But they're still simultaneously both highly ambitious and yet anodyne!

That's because ARC is evaluating for something different, whether the AI has the capability to exfiltrate its environment, recognise itself in a digital mirror test, and generally act in a fashion that could create significant issues from an x-risk point of view.

But human exams are not the same as LLM evals. LLMs are trained with orders of magnitude more information than humans, which also makes using the same tests for recall and reasoning insufficient to “prove” their capabilities. What if the training data was contaminated, either directly because the questions were included or indirectly by training to the test? You could do the tests and then use an Elo rating to ensure they work, and then use your real world LLM knowledge to choose.
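The direct form of contamination, where the question itself appears in the training data, can at least be checked mechanically if you have access to the corpus (which, for most commercial models, outsiders don’t). A rough sketch follows, assuming a simple word-level n-gram overlap with an arbitrary window of 8 tokens; the two “documents” are invented for illustration.

```python
import re
from typing import Iterable, Set, Tuple


def ngrams(text: str, n: int = 8) -> Set[Tuple[str, ...]]:
    """All runs of n consecutive lowercase word tokens in the text."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_fraction(question: str, corpus_docs: Iterable[str], n: int = 8) -> float:
    """Share of the question's n-grams that appear verbatim in the corpus."""
    question_grams = ngrams(question, n)
    if not question_grams:
        return 0.0
    corpus_grams: Set[Tuple[str, ...]] = set()
    for doc in corpus_docs:
        corpus_grams |= ngrams(doc, n)
    return len(question_grams & corpus_grams) / len(question_grams)


question = "Which property of a mineral can be determined just by looking at it?"

# Invented documents standing in for a training corpus.
leaked = ["Study guide: which property of a mineral can be determined just by looking at it? Answer: luster."]
clean = ["Luster describes how light interacts with the surface of a mineral."]

print(overlap_fraction(question, leaked))  # question appears verbatim
print(overlap_fraction(question, clean))   # no shared 8-word runs
```

This only catches verbatim leaks. The indirect kind, training to the test on paraphrased or near-duplicate material, slips straight past a check like this, which is part of why new, job-specific questions are worth the effort.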

But I should say as someone who is definitely in the very highest percentile of LLM usage, I still can’t easily say which LLMs to use for particular use cases. Sometimes the answer is “just use GPT-4” but for most interesting questions it’s not sufficient. What’s being asked is not about a model, but rather which “system of models” should you use, strung together in what format, to make the new software stack that you made work properly in context.

The places where this is easier, like doing a model which discusses support tickets or writes small report summaries or slack conversation summary bots, these aren’t the hardest problems even if they’re important (and fun). Instead the question is how do you use an LLM with other software to replace part of a person’s job in an additive manufacturing facility to move from prototyping to production while ensuring the output can be tested via finite element analysis, which is part engineering part design and part just good old gut feel improved by some pre-existing tribal knowledge.

That’s the only way we can answer Question 6. If we want LLMs to act in the real world and be deployed as widely as software today or cloud computing, then we have to figure out how to get them to solve the problems where they occur.

As someone who excelled at taking multiple choice entrance exams for universities in India, I can attest to the fact that while correlated, the skills of test taking and being useful in real life are sufficiently different! With employees you hire, some of these are analysed through interviews, and some through on-the-job training and feedback.

Francois Chollet talks about intelligence as “a measure of its skill-acquisition efficiency over a scope of tasks, with respect to priors, experience, and generalization difficulty”. That’s what needs to be brought to AI.

We need to create specific, detailed, process-focused questions that the LLMs will get tested on. We want to make questions that, if answered properly, would “get the LLMs a job”.

The reason this is far more complicated for LLMs compared to people is that we know how people work. We can estimate the correlations between their levels of knowledge and competence in specific tests with what we’ve seen in the past. With LLMs however we’re both speedrunning this experience and lacking in enough data to make these correlations implicitly.

Humans also have jagged frontiers but we’ve smoothed them through the trick of knowing which questions to ask whom. In fact we’ve gotten very good at it and we’re even paying a price by not being able to communicate easily or collaborate freely.

Is this because humans are “equal” in some ways? Not really, we’re also pretty far apart. I’d say humans are more different from each other than LLMs are from each other. However, it is because we have been iteratively trying to solve this problem in various contexts for centuries and have gotten to a place where we are less wrong than chance.

For a while, when I was a consultant, people who weren’t familiar with it asked me what I actually did. Turned out to be a ridiculously hard question to answer in the abstract. You could only answer it with specifics, a day by day path dependent analysis of how you created that particular recommendation for that energy company to decide how to invest their capex.

When said at a high level it always sounded vague. You analysed data, did interviews, made excel models, created powerpoint presentations, discussed with stakeholders, did competitive analysis. This is like a public cipher, where if you’ve also done the job you can imagine what it’s a shorthand for, but to most others it’s just meaningless pablum to make fun of.

At this level everything can be done by LLMs today. But to know how and where to use LLMs, we need a deeper picture of what we actually do. The fault, as was written, lies not in the stars but in ourselves. We need to figure out a good picture of evaluations that bridges the gap between what professionals actually do at work and its generic counterparts. The existing evals are great at the latter, and less so at the former.

At another level, perhaps we can all just sit back and wait for the ever larger generalist models to defeat the smaller purpose built ones. Some of this is just that our ideas of what is a generalist and what is a specialist in the LLM world are an offshoot of what we have observed in people. Naturally, as we saw above with the Theory Of Mind and more, this doesn’t neatly transfer over, so it might just be that we are misapplying this lesson.

To analyse the models, we’ll need to “interview” them. In fact, not just interview the models themselves, but the actual systems they are a part of, since that’s what we actually use. GPT-4 that you use is not one model, with weights you can download, but rather a collection of models and prompts and interconnections and space to execute code and more. And that overall system is what we need to evaluate.

We will need to have longer conversations and give deeper tasks, and do it often enough that we get comfortable with the reasons why they do things rather than just the results themselves.

We can only do this in two ways:

We create AIs that can learn from observation, and then demonstrate their learning, which means we can “place” them anywhere from the Goldman Sachs trading desks to a biotech lab in Novo Nordisk, and it can grok the job.

We create detailed assessments of the jobs that we actually do, and test the systems for accuracy, process identification, ability to find the edges of knowledge and find new sources of finding those out

The first is building smarter AI, in a manner of speaking, and the second is better evals for the job at hand.

The first we can test through few-shot and chain-of-thought type tests, and the second we can test through setting up more complex evaluations.

The first is an assumption that this time is unlike all others and we will just birth a new type of entity that can autonomously, and with limited data input, learn anything that needs to be learnt. The latter is an assumption that this is not the case, and we will actually have to teach it everything that’s important to us.

Regardless of where you stand on that philosophical point, we are very clearly on the latter side of the equation today. Just like we did with every other tech trend, from cloud to mobile, we will have to revisit and retool the way we did things to figure out where AI can help us.

My foray into testing this across a couple of deep domains helped lay out a few principles along the way:

You have to fight vagueness across all questions: the proof is in the action, the AI needs to show not tell

We have to generate specific scenarios, assess where the output is useful and where it deviates

You have to probe for the answers iteratively, often checking the thought process as well as the output itself, and regularly generating more options

You have to demonstrate not just accuracy of output, but the ability to correct processes based on external input or upon request

It also has to reflect and “know” when it doesn’t know something and find alternate options - ask others, search docs, search online, learn how to use a new software tool - in an iterative fashion
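These five principles can be written down as a small review rubric, so that each “interview” of a system gets scored on process and not only on the final answer. The sketch below is one possible shape for that, not a validated instrument: the criterion names, the 0 to 2 scale and the example scores are all my own shorthand, made up for illustration, and the scoring itself is assumed to come from a human expert.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# One criterion per principle above, in the same order (names are shorthand).
CRITERIA = (
    "specific_not_vague",
    "scenario_grounded",
    "process_checked",
    "corrects_on_feedback",
    "knows_limits_and_seeks_help",
)


@dataclass
class ProcessReview:
    """A human reviewer's scores for one task, 0 (absent) to 2 (solid)."""

    task: str
    scores: Dict[str, int] = field(default_factory=dict)

    def rate(self, criterion: str, score: int) -> None:
        if criterion not in CRITERIA:
            raise KeyError(f"unknown criterion: {criterion}")
        if score not in (0, 1, 2):
            raise ValueError("score must be 0, 1 or 2")
        self.scores[criterion] = score

    def missing(self) -> List[str]:
        return [c for c in CRITERIA if c not in self.scores]

    def summary(self) -> dict:
        if self.missing():
            raise ValueError(f"unrated criteria: {self.missing()}")
        weakest = min(CRITERIA, key=lambda c: self.scores[c])
        return {
            "total": sum(self.scores.values()),
            "max": 2 * len(CRITERIA),
            "weakest": weakest,
        }


# Invented scores for one hypothetical task.
review = ProcessReview(task="configure a sequencing run")
for criterion, score in zip(CRITERIA, (2, 1, 1, 0, 1)):
    review.rate(criterion, score)
print(review.summary())
```

The “weakest” field is the useful part: it points at where the system needs steering, which a single aggregate score hides.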

Having set up some few-shot questions, including some on RAG-ed documentation and scientific papers, I set up several specific questions from experts to run a few models through: on sequencing machine configurations, and on using various pieces of software from Oracle to R. Also on answering detailed questions about the drug target discovery process, from protein degradation to synthetic lethality and more. In all these instances we get snippets of the right answer from the system. But in none of them do we get what we would actually want, which is a WIP output with a clear idea of what’s missing and what to do next.

Even the existing agents that I tested, whether that’s GPT-4 Advanced Data Analysis or Open Interpreter run with various OSS models, hit the same problems.

Some things which should have been database lookups were produced directly as tokens, and incorrectly at that. Some things which should have been internet searches or searches through arxiv were instead treated as direct questions. Even adding chain-of-thought led to circular reasoning at times, or thought chains which were incorrect (“I don't know the answer to this, therefore I should search.” But then after it searches and gets something, it doesn’t check for accuracy. Or triangulate if it can’t find something, admitting defeat either too early or too late!)

The core question is whether we can somehow automate this eval to make life a little easier. Unlike with people we are not time-limited, so we can explore anything we want. The goal is not just to use a list of questions and sample answers scored somehow, but to add the ability to make the eval dynamic and interactive. We need to link the outputs to various systems to test and refine.
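To give a sense of what “dynamic and interactive” could mean in practice, here is a small sketch of an eval loop that, instead of scoring a single answer, feeds the checker’s feedback back to the system and records how many turns it takes to get to an acceptable result. Everything here is hypothetical: `toy_system` stands in for the whole LLM + data + prompt stack, and `toy_check` stands in for whatever external system or expert rule validates the output.

```python
from typing import Callable, Dict, List, Tuple

Checker = Callable[[str], Tuple[bool, str]]  # returns (passed, feedback)


def interactive_eval(
    system: Callable[[str], str],
    check: Checker,
    task: str,
    max_turns: int = 3,
) -> Dict[str, object]:
    """Run a task, feeding reviewer feedback back in until it passes or turns run out."""
    transcript: List[Tuple[str, str, bool]] = []
    prompt = task
    for turn in range(1, max_turns + 1):
        answer = system(prompt)
        passed, feedback = check(answer)
        transcript.append((prompt, answer, passed))
        if passed:
            return {"passed": True, "turns": turn, "transcript": transcript}
        # Build the next prompt from the original task plus the feedback.
        prompt = f"{task}\nYour previous answer: {answer}\nReviewer feedback: {feedback}"
    return {"passed": False, "turns": max_turns, "transcript": transcript}


# Hypothetical system: forgets the units until it is told about them.
def toy_system(prompt: str) -> str:
    return "12 mg/L" if "Reviewer feedback" in prompt else "12"


# Hypothetical checker: a stand-in for a database lookup or an expert rule.
def toy_check(answer: str) -> Tuple[bool, str]:
    if "mg/L" in answer:
        return True, ""
    return False, "State the units."


result = interactive_eval(toy_system, toy_check, "Report the sample concentration with units.")
print(result["passed"], result["turns"])
```

The transcript matters as much as the pass/fail flag, since it shows whether the system corrected its process when prompted or just kept guessing.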

A good example is AMIE, Articulate Medical Intelligence Explorer, that Google developed. It uses a practical assessment as clinicians normally do.

Inspired by accepted tools used to measure consultation quality and clinical communication skills in real-world settings, we constructed a pilot evaluation rubric to assess diagnostic conversations

This is particularly interesting because they a) trained a new model with medical datasets specifically for this, b) created a simulated learning environment for “self play”, with simulated dialogues between two models about learning environments, and then c) evaluated the response at the end through an iterated assessment scale from focus groups and interviews with clinicians across countries.

The first two are ways of training a model. But c) there, that’s the method of evaluation. It’s not cookie cutter, and along with self-scoring with a few examples of good and bad, had to be painstakingly created by taking human exams and adding extensive interviews and iterations with experts to get to something that approached authenticity.

In order to do this properly we have to understand what we’re evaluating, and we need to do this “job interview” job by job, rather than trusting that an impersonal SAT score will tell us what we need.

The evaluations will need to show the system’s ability to work, for us to trust it going forward. Not just in an interpretability sense, but also through a step by step reasoning process, so that we understand how to steer it when it goes wrong!

I’m optimistic that we will figure it out. In fact, the reason to be optimistic about it is an existence proof; that those people who are closest to the LLM world are also those who carved out specialist niches to fit LLMs into. Coding help and writing help are exactly such activities. As is research help, which I can’t live without.

Each of these is a painstaking product of specific, human-value focused analysis, and at the best of times it can only reach the heights of evaluative efficacy that is equivalent to human hiring.

This is hard. To create 10,000 evaluations for 10,000 different jobs is painstaking, boring, time-consuming, and without the sort of easy victory that comes from doing a thing once and copying it over forever. As programmers have wanted to do since forever. And the data gathered is not dense across all possible applications, which means that it can’t easily be fed back into LLM training. It’s too specific. How could you turn esoteric procedural accounting knowledge from a bulge bracket bank, or ultra-fine intuitions developed over a lifetime of fixing computer motherboards, into something usable for writing Python code? Would it transfer extra knowledge? What would that public demo even look like?

Outcome-based feedback is too slow, and process-based feedback is too task-specific. However, process-based feedback is the thing that we humans excel at, and the thing we have the least written record of. Across all datasets, we have implicit preferences and actions, but rarely do we have actual process-based insight. Even the best we have here is often synthetically generated, is neither iterative enough nor deep enough, and has limited verisimilitude.

It is also the thing that will get disputed far more than an AGIEval score. It, however, will create a step function after you do it for a while, because the tacit knowledge that exists within entire aspects of human knowledge isn’t easily reducible, and in fact knowing more about each individual domain is equivalent to knowing more about the world. (This, I freely admit, is my contention and I don’t have more data to back it up.) Though I’d love to reach forward to the world a decade from now and make it so.

These are narrow domains of human expertise, and only to be reached by LLMs through a lot of careful deliberation and multiple layers of processing!

For now, just like we did for software and for the cloud and for the various analytics engines, we'll have to revisit and create tests per domain yet again, so we know where and how and for what to implement an LLM. I don't know if that too is a job an AI can do one day, especially once it can learn from observation, but for now the complexity of human endeavours seems too great for LLMs to seamlessly fit into. We should create grooves where they're better served, a worthy task for the coming decade.

We do have a full mechanistic understanding of how they work, but we don’t have a behavioural understanding, in the sense that we can predict their response.

Sorry, Google!

ARC contains 7,787 genuine grade-school level multiple choice science questions split into a Challenge Set (2,590 questions) and an Easy Set (5,197 questions). The questions span different domains of science like physics, biology, earth science, health, and social science. It’s multiple choice.

Or is it?

Maybe none of this is needed and we can just wait for the new crop of AI+[COMPANY] startups to topple the incumbents, but somehow that’s not how any wave actually seems to work. At some point incumbents wise up and incorporate the technology.

Great article!

I do take LSAT scores, Olympiad solving, etc with a bag of salt due to data contamination. Can this be overcome by using an eval that was created after the cutoff date? For example, using Olympiad questions from 2024 instead of 2020?

Great article! I think there is a lot of misunderstanding around the capacity of our current evals to actually "test" LLMs reasoning abilities. This is a great resource to point people to, for a high-level overview of the meta-problems.

Also, after reading this line "as someone who is definitely in the very highest percentile of LLM usage, I still can’t easily say which LLMs to use for particular use cases", it would be interesting for me to see what LLMs/stacks you use for various tasks