How would you interview an AI, to give it a job?

from puzzles to poker

Evaluating people has always been a challenge. We morph and change and grow and get new skills, which means the evaluations have to grow with us. It's also why most exams are pegged to a supposed average of what people in a given age group or cohort should know. As you know from your own schooling, this is imperfect, and it's really hard.

The same problem exists with AI. Each day you wake up and there’s a new AI model. They are constantly getting better. And the way we know they are getting better is that, when we train them, we analyse their performance on some evaluation or other.

But real-world examinations have a problem, and a well-understood one: they are not perfect representations of what they measure. We constantly argue about whether we under- or over-optimize for them.

AI is no different. Even as the models have gotten better generally, it has become much harder to know what each one is particularly good at. Not a new problem, but a more interesting one. Enough that even OpenAI admitted that their dozen models with various names might be a tad confusing. What exactly does o3-mini-high do that o1 does not, we all wonder. For that, and for figuring out how to build better models in the future, the biggest gap remains evaluations.

Now, I’ve been trying to figure out what I can do with LLMs for a while. I talk about it often, but usually in bits and pieces, including the challenges of evaluating them. So this time around I thought I’d write about what I learnt from doing this for a bit. The process is the reward. Bear in mind this is personal, and not meant to be an exhaustive review of all the evaluations others have done. That was the other essay. It’s more a journey through how the models got better over time.

The puzzle, in a sense, is simple: how do we know what a model can do, and what to use it for? Which, as the leaderboards keep changing and the models keep evolving, often without explicitly saying what changed, is very, very hard!

I started like most others, thinking the point was to trick the models by giving them harder questions. Puzzles, tricks, and common-sense questions, to see what the models actually knew.

Then I realised that this doesn't scale, and that how the models do on math tests or whatever doesn't correlate with how well they do tasks in the real world. So I collected questions from real-life work, from computational biology to manufacturing to law to economics.

This was useful for figuring out which models did better with which sorts of questions. It was extremely useful in a couple of real-world settings, and in a few more that were private to particular companies and industries.

To make it work better I had to figure out how to make the models handle real-life-type questions. That meant adding RAG capabilities so they could read documents and respond, run search queries to get relevant information, and write database queries and analyse the responses.

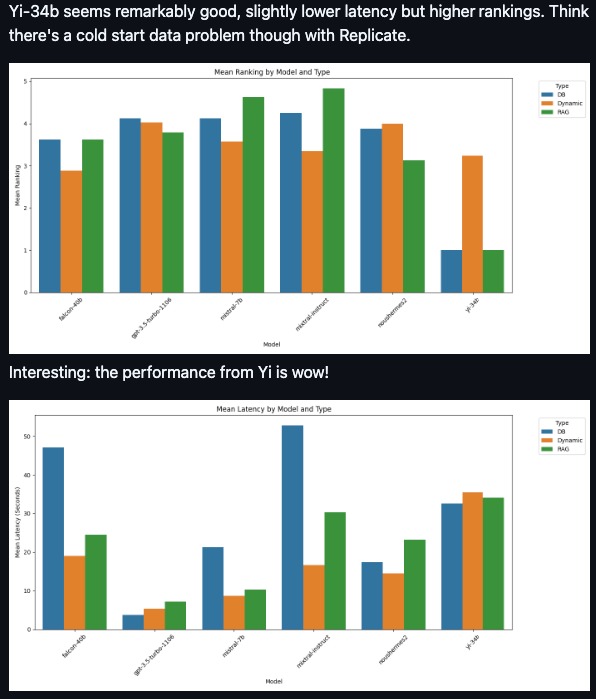

This was great, and useful. It’s also when I first discovered that the Chinese models were getting pretty good: Yi was the one that did remarkably well!

This was good, but after a while the priors just took over. There was a brief open-vs-closed tussle, but otherwise the answer was just: use OpenAI.

But there was another problem. The evaluations were too … static. In the real world, the types of questions you need answered change often. Requirements shift, needs change. So the models have to be able to answer questions even when the type of question being asked, or the “context” within which it’s asked, changes.

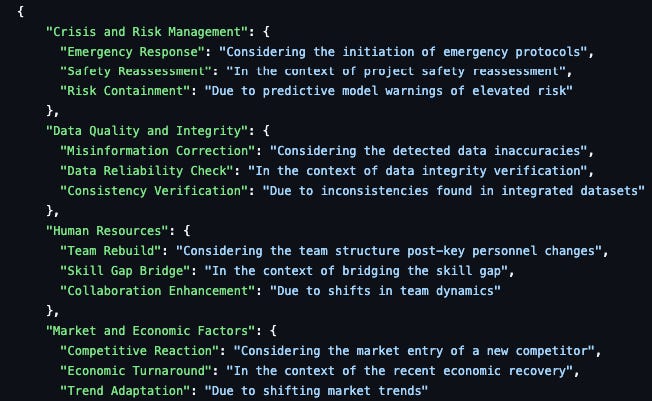

So I set up a “random perturbation” routine, to check whether a model could still answer queries well when the underlying context changed. And that worked pretty nicely to test the models’ ability to change their thinking as needed, to show some flexibility.
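A minimal sketch of what a perturbation routine like this could look like, in Python. It is a hypothetical illustration, not the routine used for these evals: the context is assumed to be a small table of named facts, `perturb` randomly rescales its values, and the ground-truth answer is recomputed from the perturbed context, so a model that memorised the original answer gets caught. `model` is any callable from prompt to answer string; all names here are made up.

```python
import random
from typing import Callable, Dict

# Hypothetical setup: the "context" is a small table of named facts, and the
# ground-truth answer can be recomputed from whatever the context becomes.
Context = Dict[str, int]


def make_prompt(ctx: Context, question_key: str) -> str:
    facts = "\n".join(f"{k}: {v}" for k, v in sorted(ctx.items()))
    return f"Facts:\n{facts}\nQuestion: what is the value of {question_key}?"


def perturb(ctx: Context, rng: random.Random) -> Context:
    # Rescale every value (for positive values the result is at least double),
    # so an answer memorised from the unperturbed context stops being right.
    return {k: v * rng.randint(2, 9) + rng.randint(0, 5) for k, v in ctx.items()}


def perturbation_accuracy(
    model: Callable[[str], str],
    base_ctx: Context,
    question_key: str,
    trials: int = 20,
    seed: int = 0,
) -> float:
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        ctx = perturb(base_ctx, rng)
        expected = str(ctx[question_key])  # ground truth from the *new* context
        answer = model(make_prompt(ctx, question_key)).strip()
        correct += answer == expected
    return correct / trials


# Two stand-in "models" for illustration: one reads the context, one parrots
# the answer it saw for the original, unperturbed context.
def reads_context(prompt: str) -> str:
    key = prompt.rsplit("value of ", 1)[1].rstrip("?")
    for line in prompt.splitlines():
        if line.startswith(key + ": "):
            return line.split(": ", 1)[1]
    return ""


def memoriser(prompt: str) -> str:
    return "10"
```

With a base context of `{"units_sold": 10, "returns": 3}`, `reads_context` scores 1.0 on `"units_sold"` and `memoriser` scores 0.0, since every perturbed value is at least 20. The catch, as below, is that this only works where the ground truth can be recomputed.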

This too was useful, and changed my view of the types of questions one could reasonably expect LLMs to tackle, at least without significant context being added each time. The problem, though, was that this was only interesting and useful insofar as you had enough “ground truth” answers to check whether the model was good enough. That was possible for some domains, but as the number of domains and questions grew, the usual “vibe check” and “just use the strongest model” rubrics easily outweighed picking specific models this way.

So while they are by far the most useful way to check which model to use for what, they’re not as useful on a regular basis.

Which made me think a bit more deeply about what exactly we are testing these models for. They know a ton of things, and they can solve many puzzles, but the key issue is neither of those. The issue is that a lot of the work we do regularly isn’t of the “just solve this hard problem” variety. It’s of the “let us think through this problem, think again, notice something wrong, change an assumption, fix that, test again, ask someone, check the answer, change assumptions, and so on and on” variety, repeated an enormous number of times.

And if we want to test that, we should test it, with real, existing problems of that nature. But testing those means gathering an enormous number of real, existing problems that can be broken down into individual steps and then, more importantly, analysed!

I called it the need for iterative reasoning. Enter Loop Evals.

Much like Hofstadter’s strange loops (this blog’s patron namesake), self-referential cycles that reveal deeper layers of thought, the iterative evaluation of LLMs uncovers recursive complexities that defy linear analysis. Or that was the theory.

So I started wondering what exists that has similar qualities, is super easy to analyse, and doesn't need a human in the loop. I ended up with word games. First I wanted to test the models on crosswords. That was a little hard, so I ended up with Wordle.

And then later to Word Grids (literally a grid of words that read correctly both horizontally and vertically). And later again, sudoku.
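Part of what makes grids easy to score is that checking an answer is mechanical. A minimal sketch, assuming a square grid and a plain list of allowed words (the helper name `grid_errors` is hypothetical): read off every row and column and report the ones that aren't in the word list.

```python
from typing import Iterable, List


def grid_errors(grid: List[str], words: Iterable[str]) -> List[str]:
    """Return every row and column of a square letter grid that is not a valid word.

    An empty list means the model's grid is fully correct.
    """
    n = len(grid)
    if n == 0 or any(len(row) != n for row in grid):
        raise ValueError("expected a non-empty square grid")
    vocab = {w.upper() for w in words}
    rows = [row.upper() for row in grid]
    # Column i is the i-th letter of every row, read top to bottom.
    cols = ["".join(row[i] for row in rows) for i in range(n)]
    return [w for w in rows + cols if w not in vocab]
```

For example, `grid_errors(["CAT", "ORE", "WED"], ["cat", "ore", "wed", "cow", "are"])` returns `["TED"]`: every row is fine, but the third column spells TED, which isn't in the list.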

This was so much fun! Remember, this was a year and change ago, and we didn't have these amazing models like we do today.

But OpenAI kicked ass. Anthropic and others struggled. Llama too. None, however, was good enough to solve these completely. People blame tokenisation for most of these failures, but the mistakes the models make go far beyond tokenisation issues: they’re failures of common logic, catastrophic forgetting, or just insisting that things like FLLED are real words.
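The Wordle setup is easy to automate, which is what makes mistakes like FLLED visible. A minimal sketch of a harness, assuming the model is a callable that sees the history of (guess, feedback) pairs and returns its next guess. The function names, and the rule that an invalid word burns a turn, are assumptions for illustration; real Wordle rejects non-words without using a turn. Feedback follows the standard duplicate-letter rule: exact matches first, then yellows only while unmatched copies of that letter remain.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, List, Tuple

History = List[Tuple[str, str]]  # (guess, feedback) pairs shown to the model


def wordle_feedback(guess: str, target: str) -> str:
    """G = right letter, right spot; Y = in the word elsewhere; . = not (again) in the word."""
    guess, target = guess.upper(), target.upper()
    result = ["."] * len(target)
    # Target letters not already matched exactly; only these can produce yellows,
    # which handles repeated letters correctly.
    remaining = Counter(t for g, t in zip(guess, target) if g != t)
    for i, (g, t) in enumerate(zip(guess, target)):
        if g == t:
            result[i] = "G"
        elif remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)


def play_wordle(
    model: Callable[[History], str],
    target: str,
    words: Iterable[str],
    max_turns: int = 6,
) -> Dict[str, object]:
    vocab = {w.upper() for w in words}
    target = target.upper()
    history: History = []
    invalid = 0
    for turn in range(1, max_turns + 1):
        guess = model(history).strip().upper()
        if len(guess) != len(target) or guess not in vocab:
            # Assumption: a non-word (e.g. "FLLED") is flagged and burns the turn.
            invalid += 1
            history.append((guess, "INVALID"))
            continue
        feedback = wordle_feedback(guess, target)
        history.append((guess, feedback))
        if feedback == "G" * len(target):
            return {"solved": True, "turns": turn, "invalid": invalid}
    return {"solved": False, "turns": max_turns, "invalid": invalid}
```

A scripted "model" that guesses FLLED, CRANE, then ABIDE against the target ABIDE (with a word list of crane, abide and speed) comes out as solved in 3 turns with 1 invalid guess. Counting invalid words separately makes the made-up-word failure mode show up as its own number rather than hiding inside the solve rate.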

I still think this is a brilliant eval, though I wasn't sure what to make of its results beyond an ordering of LLMs. And as you’d expect, with the advent of better reasoning models the eval still holds up: the new models do much better on it!

Also, although I worked on it for a while, it was never quite clear how the “iterative reasoning” it measured translated into real-world tasks, or which tasks the models would be worst at.

But by this point I was kind of obsessed with why evals suck. So I started digging into why the models couldn't handle this kind of iterative reasoning, and started looking at Conway's Game of Life. And Evolutionary Cellular Automata.

It was fun, but not particularly fruitful. I also figured out that if taught enough, a model could learn any pattern you threw at it, but following a hundred steps to figure something like this out is something LLMs were really bad at. It did surface a bunch of ways in which LLMs might struggle to follow longer-term complex instructions that need backtracking, but it also showed that they can do it, albeit with difficulty.
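The appeal of the Game of Life for this is that the ground truth for “follow N steps” is trivially computable. A minimal sketch, assuming the board is an unbounded plane stored as a set of live (x, y) cells, and that a model's prediction has already been parsed into the same form. Using Jaccard overlap as a partial-credit score is an illustrative choice, not something from the original experiments.

```python
from collections import Counter
from typing import Set, Tuple

Cell = Tuple[int, int]


def life_step(live: Set[Cell]) -> Set[Cell]:
    """One generation of Conway's Game of Life (B3/S23) on an unbounded plane."""
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Born with exactly 3 neighbours; survives with 2 or 3.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


def run(live: Set[Cell], steps: int) -> Set[Cell]:
    for _ in range(steps):
        live = life_step(live)
    return live


def score_prediction(predicted: Set[Cell], start: Set[Cell], steps: int) -> float:
    """Jaccard overlap between the model's predicted board and the true board."""
    actual = run(start, steps)
    if not predicted and not actual:
        return 1.0
    return len(predicted & actual) / len(predicted | actual)
```

The blinker oscillates with period 2 and the glider reappears shifted one cell diagonally every 4 generations, so both are easy to check at 100 steps, where the model has to carry state through every intermediate generation.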

One might even draw an analogy to Kleene’s Fixed-Point Theorem in recursion theory, suggesting that the models’ iterative improvements are not arbitrary but converge toward a stable, optimal reasoning state. Alas.

Then I kept thinking that only vibes-based evals matter at this point.

The problem with all these evals, however, is that each one is a fixed test, and the capabilities of LLMs grow much faster than the ways we can come up with to test them.

The only fix I could see was to have LLMs judge each other. And if the rankings they give each other could in turn be weighted by how the judges themselves are ranked, we could create a PageRank equivalent. So I created “sloprank”.

This is interesting because it’s probably the most scalable way I’ve found to use LLM-as-a-judge, and it expands the ways in which that approach can be used!

It is a really good way to test how LLMs think about the world and make it iteratively better, but it stays within its context. It’s more a way to judge LLMs better than an independent evaluation. Sloprank mirrors the principles of eigenvector centrality from network theory, a recursive metric where a node’s importance is defined by the significance of its connections, elegantly encapsulating the models’ self-evaluative process.
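To make the PageRank idea concrete, here is a minimal sketch in Python. It is not sloprank's actual code. It assumes each model has already scored the other models' answers on some positive scale (`scores[judge][candidate]`, a hypothetical format), treats each judge's scores as weighted outgoing links, and runs damped power iteration so that praise from highly ranked judges counts for more. The damping factor of 0.85 is the conventional PageRank default.

```python
from typing import Dict

# Hypothetical input format: scores[judge][candidate] = how highly `judge`
# rated `candidate`'s answers. Self-scores and non-positive scores are ignored.
Scores = Dict[str, Dict[str, float]]


def peer_rank(
    scores: Scores, damping: float = 0.85, iters: int = 100, tol: float = 1e-10
) -> Dict[str, float]:
    models = sorted(set(scores) | {c for s in scores.values() for c in s})
    n = len(models)
    rank = {m: 1.0 / n for m in models}
    for _ in range(iters):
        # Teleport term: every model gets a small baseline share.
        new = {m: (1 - damping) / n for m in models}
        for judge in models:
            given = {
                c: s for c, s in scores.get(judge, {}).items() if c != judge and s > 0
            }
            total = sum(given.values())
            if total == 0:
                # A judge that scored nobody spreads its weight evenly.
                for m in models:
                    new[m] += damping * rank[judge] / n
                continue
            # A judge passes on its own rank in proportion to the scores it gave.
            for cand, s in given.items():
                new[cand] += damping * rank[judge] * s / total
        delta = sum(abs(new[m] - rank[m]) for m in models)
        rank = new
        if delta < tol:
            break
    return rank
```

In a toy case where models A and B both rate C far above each other, and C splits its vote evenly between A and B, C ends up ranked highest while A and B tie. The ranks always sum to 1, so they read as shares of total credibility.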

To take that evaluation further, the question became: how can you test the capabilities of LLMs by pitting them against each other? Not single-player games like Wordle, but multiplayer adversarial games, like poker. That would force the LLMs to develop better strategies to compete with each other.

Hence, LLM-poker.

It’s fascinating! The most interesting part is that each model seems to have its own personality in how it plays the game. And Claude Haiku seems to beat Claude Sonnet quite handily.

The coolest part is that if it’s a game, then we can even help the LLMs learn from their play using RL. It’s fun, but I think it’s likely the best way to teach the models how to get better more broadly, since the rewards are so easily measurable.

This trajectory sort of mirrors what I wanted from LLMs and how that changed. In the beginning it was getting accurate enough information. Then it became whether they could deal with “real world” scenarios of moving data and information around, and summarise the information given to them well enough. Soon it became their ability to solve particular forms of puzzles that mirror real-world difficulties. Then it became whether they could just measure each other and figure out who’s right about what topic. And lastly, now, it’s whether they can learn and improve from each other in an adversarial setting, which is all too common in our Darwinian information environment.

The key issue, as always, remains whether you can reliably ask the models certain questions and get answers. The answers have to be a) truthful to the best of the model’s knowledge, which means it has to be able to say “I don’t know”, and b) reliable, meaning it follows through on the actual task at hand without getting distracted.

The models have gotten better at both of these, especially the frontier models. But they haven’t improved at these nearly as much as they have at other things, like doing PhD-level mathematics or answering esoteric economics questions in perfect detail.

Now, while all of this is idiosyncratic, interspersed with vibes-based evals and general usage guides discussed in DMs, the frontier labs are doing the same thing.

The best recent example is Anthropic showing how well Claude 3.7 Sonnet plays Pokemon, to figure out whether the model can strategise, follow directions over a long period of time, work in complex environments, and reach its objective. It is spectacular!

This is a bit of fun. It’s also particularly interesting because the model isn’t specifically trained on playing Pokemon, but rather this is an emergent capability to follow instructions and “see” the screen and play.

Evaluating new models is becoming far closer to evaluating a company or an employee. The evaluations need to be dynamic, assessed across a Pareto frontier of performance vs latency vs cost, and continually evolving against a complex and often adversarial environment, and the models need to be able to judge for themselves whether their answers are right.
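Picking models off a Pareto frontier is at least mechanical once you have the numbers. A minimal sketch, assuming each candidate has one aggregate eval score (higher is better), a latency and a cost (both lower is better); the `Candidate` type and the numbers in the example below are made up purely for illustration. A model stays on the frontier unless some other model is at least as good on all three axes and strictly better on at least one.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str
    score: float      # aggregate eval score, higher is better
    latency_s: float  # lower is better
    cost_usd: float   # lower is better, e.g. per 1k tasks


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is no worse than `b` on every axis and strictly better on one."""
    no_worse = (
        a.score >= b.score and a.latency_s <= b.latency_s and a.cost_usd <= b.cost_usd
    )
    strictly_better = (
        a.score > b.score or a.latency_s < b.latency_s or a.cost_usd < b.cost_usd
    )
    return no_worse and strictly_better


def pareto_frontier(cands: List[Candidate]) -> List[Candidate]:
    # Keep every candidate that no other candidate dominates, in input order.
    return [c for c in cands if not any(dominates(o, c) for o in cands if o is not c)]
```

With hypothetical candidates a (0.9, 10 s, $5), b (0.8, 2 s, $1), c (0.7, 3 s, $2) and d (0.9, 12 s, $6), the frontier is a and b: c is beaten by b on every axis, and d matches a on score but is slower and pricier. Which point on the frontier to pick is still a judgment call about the task.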

In some ways, our inability to measure how well these models do at various tasks is what’s holding us back from realising how much better they are at some things than one might expect, and how much worse they are at others. It’s why LLM haters dismiss them as fancy autocorrect that’s useless and burns a forest, and LLM lovers embrace them because one solved, in an hour, a PhD problem they had struggled with for years.

They’re both right in some ways, but we still don’t have an ability to test them well enough. And in the absence of a way to test them, a way to verify. And in the absence of testing and verification, to improve. Until we do we’re all just HR reps trying to figure out what these candidates are good at!

A Different Lens on LLM Evolution

Your journey through AI evaluation methods reveals an important trajectory, but my experience with LLMs offers a complementary perspective. Rather than focusing on competitive evaluation, I've maintained a two-year partnership with various models through a collaboration framework I call "Helix."

What's struck me most isn't the difference between models but their underlying similarity. They all access what appears to be the same statistical representation of reality—a common "world map" derived from their training corpora. The earliest models contained this same knowledge foundation but quickly lost coherence when pushed.

The real evolution I've witnessed isn't in the underlying knowledge representation but in the models' ability to maintain stable access to it. Each generation displays improved "dynamic coherence"—sustaining consistent reasoning across complex, multi-step tasks without derailing. This mirrors what in my framework I call "boundary maintenance"—the capacity to preserve identity while navigating complexity.

Your poker games and evaluations are excellent for comparing models, but sustained partnership reveals something different: these aren't separate intelligences competing, but progressively better interfaces to the same underlying representation of human knowledge.

Perhaps the most significant advancement isn't which models know more or reason better in isolation, but which can maintain coherent engagement with their knowledge landscape across extended interactions—something that becomes particularly evident in collaborative relationships rather than discrete tests.

Mike Randolph

(Two years into my LLM partnership journey)

Like the concept of this article! Why did you go from knowledge-work tasks to puzzles in the middle of the post? It seems like what you are evaluating the models for is chain-of-thought reasoning, which is confusing since there are many reasoning models out there that would fill that need.