AI has completely entered its product arc. The models are getting better, but they’re not curios any longer; we’re using them for stuff. And all day, digitally and physically, I am surrounded by people who use AI for everything: from those who have it completely integrated into every facet of their daily workflow, to those who want to spend their time basically chatting or using only the command line, and everything in between. People who spend most of their time either playing around with or learning about whichever model just dropped.

Despite this, I find myself almost constantly the only person who actually seems to have played with, or is interested in, or even likes Gemini. The exception is a few friends who actually work on Gemini, though even they look apologetic most of the time, and wear an I-can’t-quite-believe-it slight smile the handful of other times when they realise you’re not about to yell at them about it.

This seemed really weird, especially for a top-notch research organisation with infinite money, and it annoys me, so I thought I would write down why. I like big models, models that “smell” good, and seeing one hobbled is annoying. We need better AI, and the way we get it requires strong competition. It’s also a case study, but this is mostly a kvetch.

Right now we only have Hirschman’s Exit; it’s time for Voice. I’m writing this out of a sense of frustration because a) you should not hobble good models, and b) as AI is undeniably moving to a product-first era, I want more competition in the AI product world, to push the boundaries.

Gemini, ever since it released, has felt consistently like a really good model that is smothered under all sorts of extra baggage. Whether it’s strong system prompts or a complete lack of proper tool calling (it still says it doesn’t know if it can read attachments sometimes!), it feels like a WIP product even when it isn’t. It seems to be just good enough that you can try to use it, but not good enough that you want to use it. People talk about a big model smell, similarly there is a big bureaucracy smell, and Gemini has both.

Now, despite the annoying tone, which feels like a know-it-all who has exclusively read Wikipedia, I constantly find myself using it whenever I want to analyse a whole code base, or analyse a bunch of PDFs, or if I need to have a memo read and rewritten, and especially if I need any kind of multimodal interaction with video or audio. (Gemini is the only other model that I have found offers good enough suggestions for memos, the same as or exceeding Claude, even though the suggestions are almost always couched in the form of exceedingly boring-looking bullet points and a tone I can only describe as corporate cheerleader.)

Gemini also has the deepest bench of interesting ideas that I can think of. It had the longest context, multimodal interactivity, the ability to have it actually keep looking at your desktop while you chat to it, NotebookLM, the ability to export documents directly into Google Drive, the first deep research, LearnLM (something built specifically to help you actually learn things), and probably 10 more things that I'm forgetting but that were equally breakthrough and that nobody uses.

Oh, and Gemini Live, the ability to talk live with an AI.

And the first reasoning model with visible chain-of-thought thinking traces, in Gemini 2.0 Flash Thinking.

And even outside this, Google is chock full of extraordinary assets that would be useful for Gemini. Hey Google is one example, and it seems to have helped a little bit. But there is also Google itself to provide search grounding, the best search engine known to the planet. Google Scholar. Even News, a clear way to provide real-time updates and have a proper competitor to X. They had Google Podcasts, which they shuttered, unnecessarily in my opinion, since they could have easily just created a version that was based only off of NotebookLM.

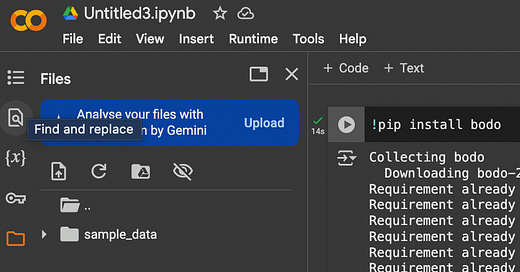

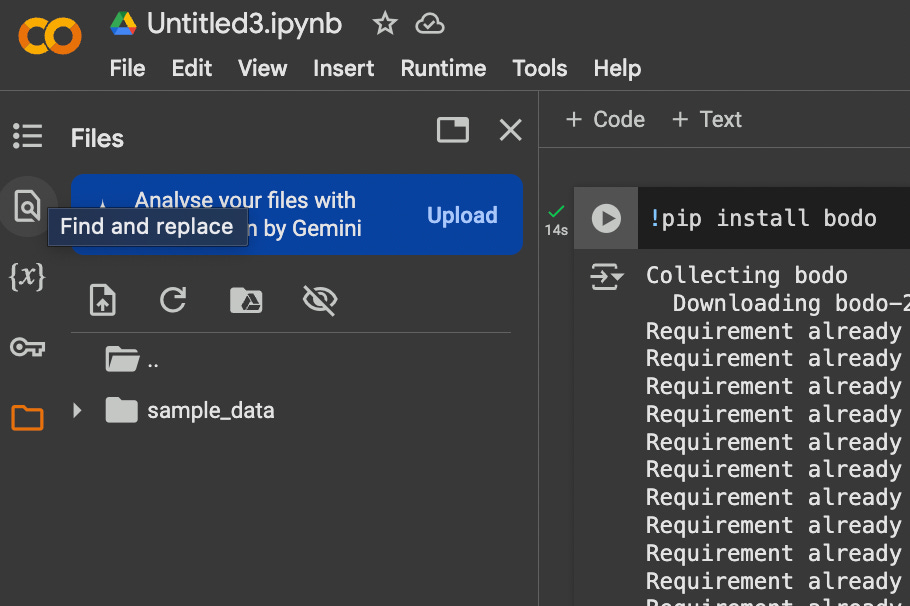

Also Colab, an existing way to write and execute Python code and Jupyter notebooks, including GPU and TPU support.

Colab even just launched a Data Science agent, which seems interesting and similar to OpenAI's Advanced Data Analysis. But true to form it’s also in a separate user interface, on a separate website, as a separate offering. One that’s probably great for those who use it, who are overwhelmingly students and researchers (I think), one with 7 million users, and one that’s still unknown to the vast majority who interact with Gemini! But why would this sit separate? Shouldn’t it be integrated with the same place I can run code? Or write that code? Or read documents about that code?

Colab even links with your GitHub to pull your repos from there.

Gemini even has its Code Assist, a product I didn’t even know existed until a day ago, despite spending much (most?) of my life interacting with Google.

Gemini Code Assist completes your code as you write, and generates whole code blocks or functions on demand. Code assistance is available in many popular IDEs, such as Visual Studio Code, JetBrains IDEs (IntelliJ, PyCharm, GoLand, WebStorm, and more), Cloud Workstations, Cloud Shell Editor, and supports most programming languages, including Java, JavaScript, Python, C, C++, Go, PHP, and SQL.

It supposedly can even let you interact via natural language with BigQuery. But, at this point, if people don’t even know about it, and if Google can’t help me create an entire frontend and backend, then they’re missing the boat! (by the way, why can’t they?!)

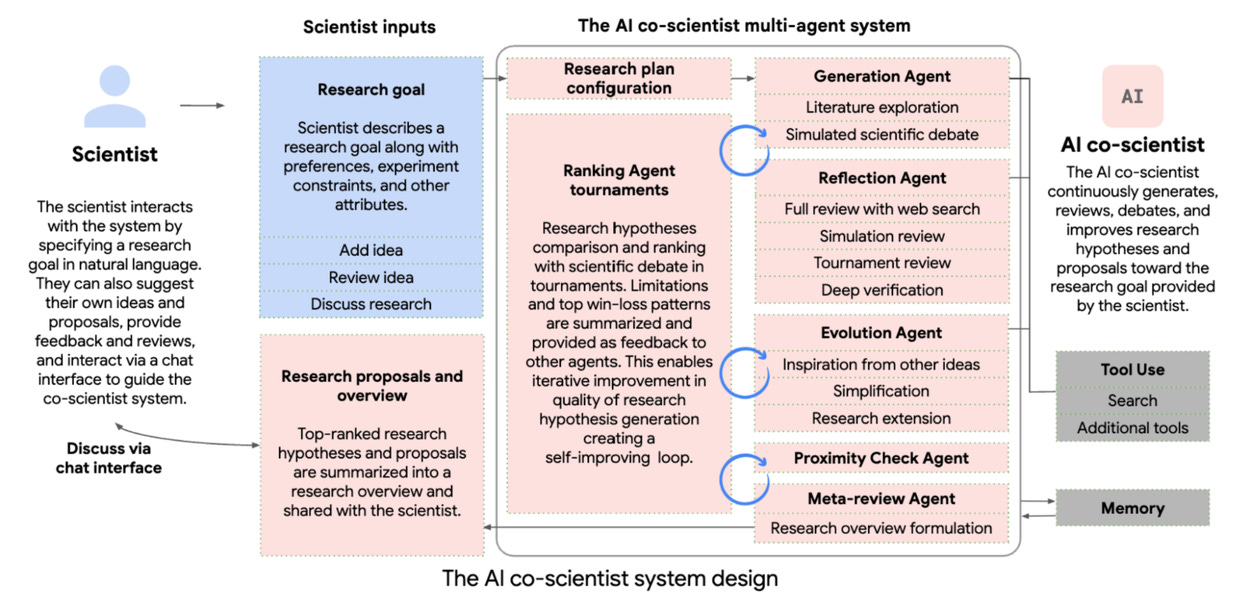

Gemini even built the first co-scientist that actually seems to work! Somehow I forgot about this until I came across a stray tweet. It's a multi-agent system that generates scientific hypotheses through iterated debate, reasoning and tool use.

Gemini has every single ingredient I would expect from a frontier lab shipping useful products. What it doesn’t have is smart product sense to actually try and combine it into an experience that a user or a developer would appreciate.

Just think about how much had to change, to push uphill, to get a better AI Studio in front of us, arguably Gemini’s crown jewel! And that was already a good product. Now think about anyone who had to suffer through using Vertex, and these dozens of other products, each with its own KPIs and userbase and profiles.

I don’t know the internal politics or problems in making this come about, but honestly it doesn’t really matter. Most of the money comes from the same place at Google and this is an existential issue. There is no good reason why Perplexity or Grok should be able to eat even part of their lunch, considering neither of them even has a half-decent search engine to help!

Especially as we're moving from individual models to AI systems which work together, Google's advantages should come to the fore. I think the flashes of brilliance that the models demonstrate are a good start but man, they’ve got a lot to build.

Gemini needs a skunkworks team to bring this whole thing together. Right now it feels like disjointed geniuses putting LLMs inside anything they can see - inside Colab or Docs or Gmail or Pixel. And some of that’s fine! But unless you can have a flagship offering that shows the true power of it working together, this kind of doesn’t matter. Gemini can legitimately ship the first Agent which can go from a basic request to a production ready product with functional code, fully battle-tested, running on Google servers, with the payment and auth setup, ready for you to deploy.

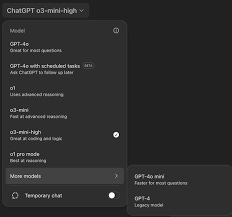

Similarly, and not just for coding, you should be able to go from iterative refinement of a question (a better UX would help here, to navigate the multiple types of models and the mess), to writing and editing a document, to searching specific sources via Google and researching a question, to final proofreading and publishing. The advantage Gemini has is that all the pieces already exist, whereas OpenAI or Anthropic or even Microsoft still need to build most of this.

While this is Gemini specific, the broad pattern is much more general. If Agents are to become ubiquitous they have to meet users where they are. The whole purpose is to abstract the complexity away. Claude Code is my favourite example, where it thinks and performs actions and all within the same terminal window with the exact same interaction - you typing a message.

It’s the same thing that OpenAI will inevitably build, bringing their assets together. I fought this trend when they took away Code Interpreter and bundled it into the chat, but I was wrong and Sama was right. The user should not be burdened with the selection trouble.

I don’t mean to say there’s some ultimate UX that’s the be-all and end-all of software. But there is a better form factor to use the models we have to their fullest extent. Every single model is being used suboptimally, and that has been the case for a year. Because to get the best from them is to link them across everything that we use, and to do that is hard! Humans context switch constantly, and the only way models can do the same is if you give them the context. This is so incredibly straightforward that I feel weird typing it out. Seriously, if you work on this and are confused, just ask us!

Google started with the iconic white box. Simplicity to counter the insane complexity of the web. Ironically now there is the potential to have another white box. Please go build it!

PS: A short missive. If any of y’all are interested in running your Python workloads faster and want to crunch a lot of data, you should try out Bodo. 100% open source: just “pip install bodo” and try one of the examples. We’d also appreciate stars and comments!

GitHub: https://github.com/bodo-ai/Bodo

Bureaucracy is a way bigger brake on LLM development than most people realize. Particularly when it’s political.

The biggest / funniest Google disconnect that I know about - I just ran across a site that uses the Google Vision API on any still photo you submit, which uses image recognition and/or Gemini (the API has a zillion options and it's hard to determine which of the zillions the site in question is using) to determine emotion, setting, context, likely income, likely politics, and best marketing products and angles.

Me and some friends tried it, and it was surprisingly good and accurate.

The thing I found most interesting about it was in a decade plus of using Google products, I've basically never seen a relevant ad.

So more than ten years of emails, documents, video meetings, spreadsheets, social graph analysis, and whatever else got them nothing, but running a single still photo through Vision / Gemini single-shot a much better segmentation?? Crazy.

But if you're interested in trying too, here's the link. No idea what they do with your photo and the inferred data, I assume they collect it in a database for marketing or something even more nefarious.

https://theyseeyourphotos.com/