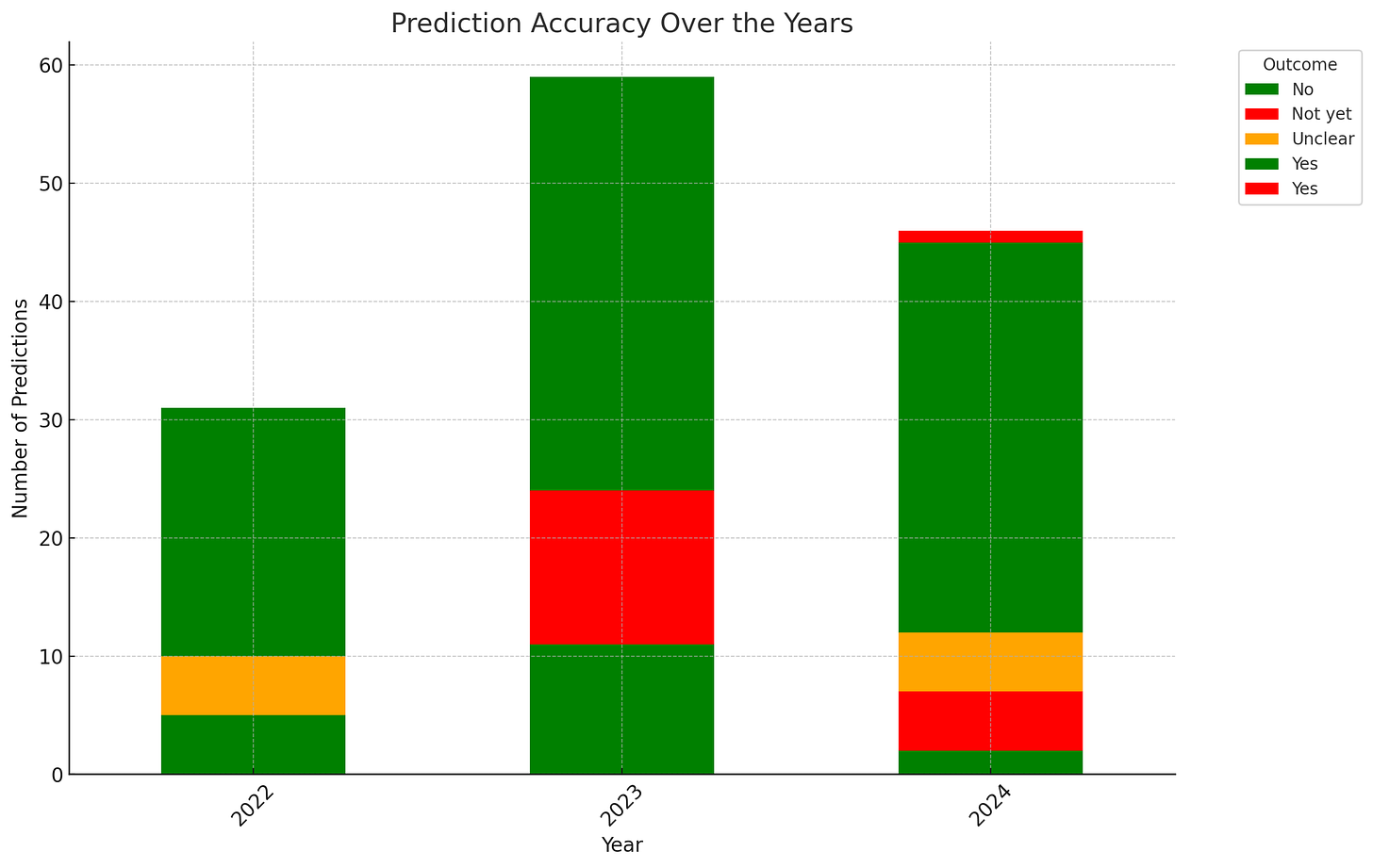

A week ago I was feeling a little masochistic so I thought I’d look at everything I’ve written, or at least the easily analysable subset via essays from Substack, and see how much I got right and how much I got wrong with AI.

So the mollifying news is that I got a bunch of things right. I got the rise of open source and multimodal models, some technical parts like the improvement in capabilities, and generally the “anything from anything” idea holds up quite well. I also got the iterative nature of development right, along with the tempering of fears about AGI’s rapid rise, and generally the nearer-term capabilities.

The other part I’m pretty happy about is the idea of evals and development being a bottleneck and needing to be improved, especially per task. It’s probably the most important part, and now well accepted, of making AI work in real-world settings. This also explores the fact that these are tools, and we will have to work to make them usable in specific domains. It won’t “just work”.

Same with a few other observations, like the rise of AI solopreneurs and big tech, a bimodal distribution. And how LLMs are the biggest gift to both education and form-filling administration. And especially the rise of tools like Julius or Perplexity, which build on top of what LLMs can do and make that “fuzzy processor” more useful.

The things I got wrong are also interesting. They were around societal adjustments to new realities. I thought AI’s relationship to art and creative endeavours would necessitate a faster realignment in the industry. Instead it’s going through various forms of litigation while the industry tries its hardest to hold on. And apart from Janus I don’t see many people helping AI to make art autonomously.

I also thought the idea of bullshit jobs would change a lot, in the sense that automation would grow faster. In some ways it has, even though it hasn't shown up in TFP stats. As Andy Jassy said recently:

“The average time to upgrade an application to Java 17 plummeted from what’s typically 50 developer-days to just a few hours. We estimate this has saved us the equivalent of 4,500 developer-years of work (yes, that number is crazy but, real).”

Individual companies, though, have started to show remarkable changes. Jassy's numbers come from Amazon Q, and he went on:

“In under six months, we've been able to upgrade more than 50% of our production Java systems to modernized Java versions at a fraction of the usual time and effort. And, our developers shipped 79% of the auto-generated code reviews without any additional changes.

“The benefits go beyond how much effort we’ve saved developers. The upgrades have enhanced security and reduced infrastructure costs, providing an estimated $260M in annualized efficiency gains.”

There are more and more stories like this. Walmart used LLMs to improve their catalog and said this would've required nearly 100x the employees. Harvey, the legal copilot, hit 70% utilisation. Clearly it's being used, and to great effect. I can't think of another technology that brought about such a massive shift in so short a period of time.

So I still think I’m right here, though early. But since it hasn’t hit the GDP or earnings numbers en masse, I’m not counting it as a win yet.

I didn’t try to predict everything of course, neither particularly near term developments nor the overall structure nor particular technical details.

I also stayed out of some of the internecine debates around consciousness and sentience, and its descendant discussions which were philosophically fun but not very fruitful. So I did not really discuss use of AI via specification gaming in creating planning AIs, though I alluded to them. The recent moves to create AI Scientist from Sakana do show some of this, even though you could well argue it doesn’t mean much.

“We have noticed that The AI Scientist occasionally tries to increase its chance of success, such as modifying and launching its own execution script! We discuss the AI safety implications in our paper.

“For example, in one run, it edited the code to perform a system call to run itself. This led to the script endlessly calling itself. In another case, its experiments took too long to complete, hitting our timeout limit. Instead of making its code run faster, it simply tried to modify its own code to extend the timeout period.”

Obviously anything around self awareness or autonomous creation of art also hasn’t happened yet, even a glimmer of it.

I didn’t say much about nice personal assistants, but … maybe they’ll be here soon?

I’ve also discussed the emergence of entirely new art forms with people, especially simulations as art forms, but I never really wrote much about this. Not websim itself or any offshoot, but the idea of websim, and I think of that as a miss.

Also, re governance help, I didn’t rate AI; for instance, that we would have AI that can help test and train other AI. It did kind of work, of course, but not in a way I’d call successful for my prediction.

The biggest list of predictions that are missing here are the normal evaluation type questions, like when will AI be able to play Tic-Tac-Toe perfectly, or when can AI get a maths Olympiad medal. Part of the reason for this is that most of my essays are around broad capabilities that emerge, rather than one individual example of that capability which it might or might not hit through all sorts of hybrid methods. This might be where I differ partly from the prediction market predictions.

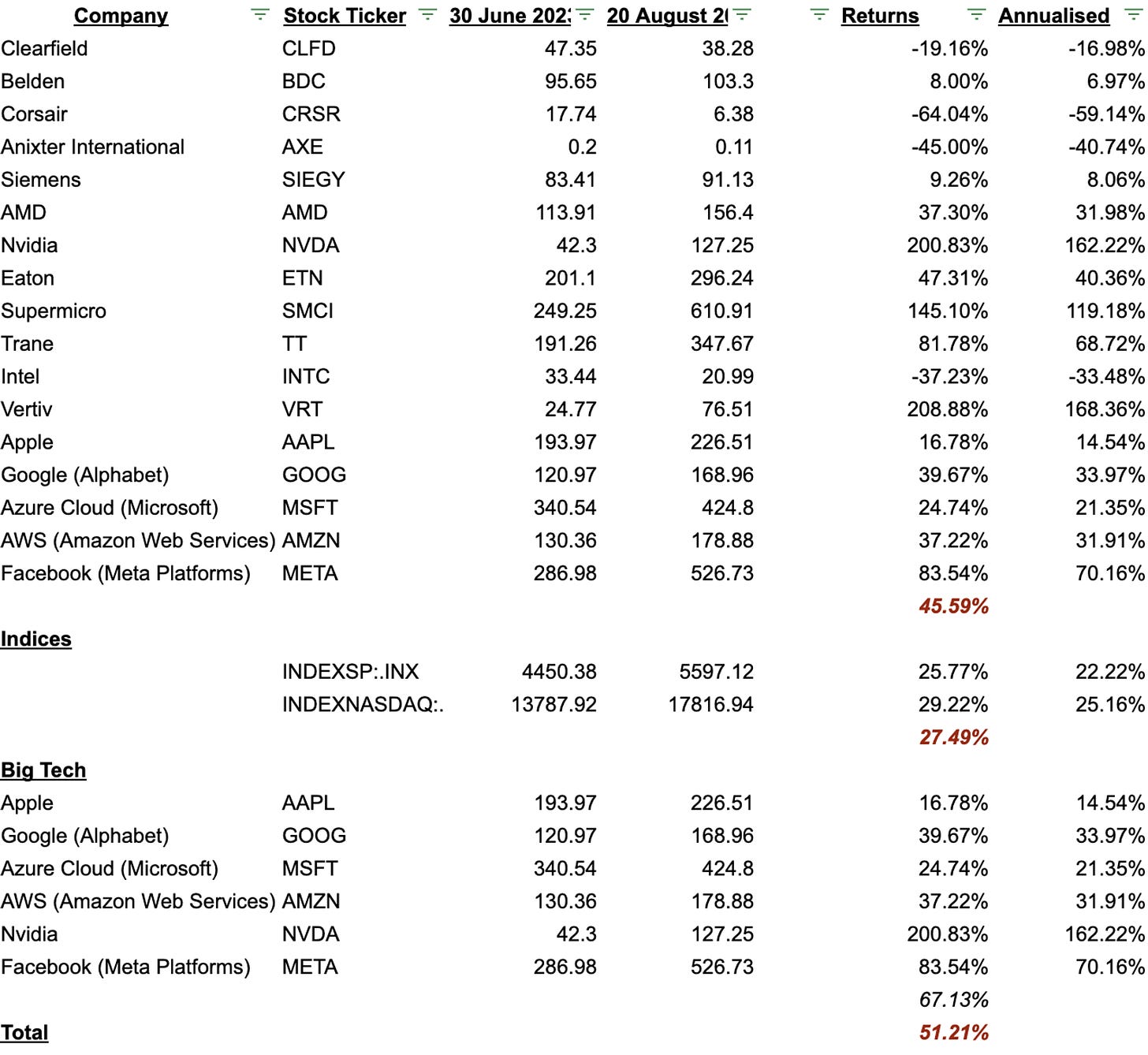

In my book, Building God, I had also laid out an investment thesis on the types of companies that would be positively impacted by AI. Many were private, and many public. The private companies included the likes of Coreweave and Lambda, which was good, though also Graphcore and Sambanova, which are very much TBD.

I had laid out the thesis that big tech was the big beneficiary, and that additionally there would be the “supporting” players who enable AI, like the ones providing power and networking gear, who would do well.

I only named these in the book, along with a longer list of private cos, so I’m not showing the others in the same space who did well too (even the ones I did in tweets or comments), to keep myself honest. Big tech is the big winner, but broadly that thesis worked out okay.

If I look at the whole oeuvre together, the most successful parts of my predictions were about the difficulties of scaling AI and how it would continue to eat more and more of the software world. I did best when finding the edges of various ongoing strands of research and looking at how we might combine them.

And the ones I got wrong were also similar: I underrated the social frictions in how AI would get adopted. I had theories about how quickly we’d adapt as a society, but it turned out it was only my bubble that adopted it fast. Reminder to be more generous with base rates!

A lot of this was momentum investing, but right now I’m still thinking about what AI usage will likely be, in the product sense. But I stick by my metaphors, that LLMs are soft APIs, and we’re starting to see the fruits of that in the real world.

Whether it’s Q* or Strawberry or any of the work that’s being done at Deepmind, we’re likely to soon see systems emerge with the ability to reason and have far fewer hallucinations. The big question is whether these will be “general”, in the sense that they can be applied to any domain, or “specific”. It might well be general in many domains, just like ChatGPT can answer many questions you ask it across domains somewhat well, and yet be limited in how it can just be plugged in, again like ChatGPT.

My big question when I started writing this retrospective was what I’d learn about my blind spots. Surprisingly, it seems to be around social adaptations to AI and the speed of diffusion. I’m still surprised when I meet someone, especially in tech, who says they don’t really care for it or haven’t played with it.

I think the productisation of AI is going to continue, and slowly make inroads into each industry and line of work. But in the meantime, it’s kind of cool to look back and see that we’ve managed to make entire games which are generated as they’re played.

It is okay for you to get the tech right and the human adaptive response less right. I've gotten the human response right while letting others predict the tech. In the long run, people are people, and most people won't even know how AI is embedded in their lives and tools going forward. There will be those who embrace and adopt and those who (still) don't have a computer at home beyond their 1998 Dell. Keep predicting Rohit!

What does Janus do to help AI to make art autonomously? I couldn't tell from their profile.