Punctuated equilibria theory of progress

What if progress in one field might depend on sufficient advances in others

[Part of an ongoing series - one, two, three, four, five, six]

I

In 1972 the eminent scientist Stephen Jay Gould proposed an idea about how evolution works that made a whole bunch of people really mad. The issue was gaps in the paleontological record. The parties involved were the religious believers, the atheists who understood evolution, the atheists who thought they understood evolution, and a few other variants.

So the problem was simple. Evolution is true. It happened gradually across a bunch of places and times. And yet the fossil record, which is basically our X-ray vision equivalent, only shows a few "in-between" forms. What gives?

When the Pakicetus decided over a period of time to turn into whales and dolphins, we should see a few forms that show us that transformation happening. If things were spread out our views of transitional fossils should also be the equivalent of a random sampling. And if the process was actually gradual, we shouldn't find evidence of the same type of fossil large gaps of time apart.

Answer one is that yes, it is a random sampling, so maybe all this hullaballoo is just pointless whining. We should thank our lucky stars that we have at least what we see. This has a Zeno's paradox feel to it since there's always at least one more transitional animal to find.

Plus as a personal pet peeve it always feels weird to call certain animals transitional species since evolution isn't directional. It's not like for a few million years some mammals were sighing that they wanted to be whales but just hadn't gotten around to it yet.

The second answer is that maybe there's something systemic going on. If we do see gaps in the fossil records it's worth asking if those gaps are indications of the underlying mechanism, just like analysing the distribution of balls pulled out of urns at random tells us something about the balls-in-urn distribution. And that's kind of what Gould did.

Essentially he, with his colleague Niles Eldredge, proposed that maybe evolution wasn't the gradual process it had been understood to be until then. Yes there was gradual accumulation of new characteristics. Yes this involved mutation and natural selection. And yes this happened across multiple scales.

But there's also the fact that these equilibria were fragile at best, present in a form of homeostasis, and disturbed through external events. And these events would give rise to new and rapid forms of speciation.

One way is to think of this as a large number of latent mutations standing poised and ready, and as the environment changes it turns from a relatively steady competitive equilibrium to an evolutionary free-for-all.

That was the theory of punctuated equilibria.

Considering the idea has been roundly mocked by Richard Dawkins and Daniel Dennett, it clearly struck more than a few nerves somewhere.

Conclusive proof here is unlikely. But examining the possibility isn't that farfetched. After all, what the theory proposes hinges on two essential points:

Once evolved, species kind of retain their basic shape for a good long while (until extinction in the original elaboration)

Environmental factors create variations in selection pressures upon an organism and force directions onto the evolutionary process

Neither seems particularly controversial. The latter for sure is close to orthodoxy at this point. And the former is a more strictly worded version of something people believe in anyway; what's in question here is the rate of adaptation at a (so to speak) steady state.

As an external party this is kind of interesting because there doesn't seem to be much a priori reason why only one of these needs to be true. Surely there can be gradual mutations that accumulate when advantageous (or not strictly deleterious), which is how we can get Okapis from Samotheriums, but there can also be massive earth-shattering changes in short periods of time which is how we can get blue whales from wolf-like Pakicetus.

And lo and behold, a paper that tried to model it out found the following:

To test this hypothesis, we developed a novel maximum likelihood framework for fitting Levy processes to comparative morphological data. This class of stochastic processes includes both a gradual and punctuated component. We found that a plurality of modern vertebrate clades examined are best fit by punctuated processes over models of gradual change, gradual stasis, and adaptive radiation.
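To make "both a gradual and punctuated component" a little more concrete, here's a minimal sketch of a discrete-time jump-diffusion, one simple member of the Lévy family. It only simulates a trait; it does not fit anything by maximum likelihood, and it is not the paper's framework. The function names, parameter values, and the "largest step share" statistic are all my own assumptions for illustration.

```python
import random


def simulate_trait(steps, sigma=0.05, jump_rate=0.0, jump_scale=1.0, seed=0):
    """Simulate one trait trajectory: small Gaussian steps plus rare large jumps.

    All parameters are illustrative assumptions, not values from the paper.
    """
    rng = random.Random(seed)
    trait = 0.0
    path = [trait]
    jumps = 0
    for _ in range(steps):
        # Gradual component: a small random change at every step.
        trait += rng.gauss(0.0, sigma)
        # Punctuated component: with probability jump_rate, add a large jump.
        if rng.random() < jump_rate:
            trait += rng.gauss(0.0, jump_scale)
            jumps += 1
        path.append(trait)
    return path, jumps


def largest_step_share(path):
    """Fraction of the total absolute change that came from the single largest step."""
    sizes = [abs(b - a) for a, b in zip(path, path[1:])]
    total = sum(sizes)
    return max(sizes) / total if total > 0 else 0.0


# Purely gradual: many small steps, no jumps.
gradual, _ = simulate_trait(1000, sigma=0.05, jump_rate=0.0)
# Mostly stasis with occasional bursts: tiny steps plus rare big jumps.
punctuated, n_jumps = simulate_trait(1000, sigma=0.01, jump_rate=0.005, jump_scale=2.0)

print(f"gradual: largest step is {largest_step_share(gradual):.1%} of all change")
print(f"punctuated ({n_jumps} jumps): largest step is "
      f"{largest_step_share(punctuated):.1%} of all change")
```

In the gradual run no single step can account for much of the change. In the punctuated run, whenever a jump does occur, a handful of steps tend to carry most of it, which is roughly the signature a model comparison would be looking for.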

II

Sometimes it feels like the same is true in the world of technology, though on slightly compressed timescales. We see long-ish periods of stasis, with limited differentiation amongst companies that actually compete and succeed, and occasionally there's a sea-change.

For instance, painting with an extraordinarily broad brush, the late 00s had a resurgence of social media that took it mainstream. Through a combination of mobile phones becoming ubiquitous and data plans becoming globally accessible, the environment changed drastically. And that meant that a new type of industry could emerge.

It's not that the gradual accumulation of knowledge and insights wasn't enough to put Friendster and MySpace and Orkut on the map. It's that they weren't able to evolve with the changing environment like Facebook and LinkedIn were.

The trouble with writing about technology and creating examples of industry shifts is of course that it's easy for this to devolve into a boundary drawing competition. What's truly considered a change in the environment vs what's considered an independent company-specific technology innovation vs what's considered a business-model innovation.

But even if all those were partially true, it remains a fact that Facebook had to pivot hard to get ready for mobile. That's at least one external datapoint to validate.

And if negative spaces were an indicator, so are Microsoft's attempts at building and/or buying into the mobile world. Or Google's multiple attempts at buying a way into the social media world.

There's a joke in technology investing that every startup wants to be a platform, and that every feature team inside every tech company wants its feature to also become a platform. Apart from some misunderstandings about what the word platform means, the idea here is also somewhat analogous to the disequilibrium theory.

If you believe that there's a big environmental shift coming (everything's moving to the cloud, AI capabilities will increase faster than everyone thinks, people's data is valuable) then you want to bet on a whole species emerging because of your efforts. At the risk of destroying a metaphor, you want to bet on the rise of mammals, and not just the changes that'll take place to the species Cimolestes.

There's a broader theory, by Baumgartner and Jones, that applies the punctuated equilibrium model to the social sciences. They state that policies generally only see incremental changes due to all sorts of restraints, explicit and implicit, and that this stickiness only changes at times of large-scale external environmental changes.

Like most overly broad theories it seems it can be applied to anything. For instance, there was research done by Connie Gersick into the evolution of organisational design revealing patterns of change in a bunch of things. To quote:

... compares models from six domains-adult, group, and organizational development, history of science, biological evolution, and physical science-to explicate the punctuated equilibrium paradigm and show its broad applicability for organizational studies.

The analysis showed evidence for punctuated growth across all domains rather than steady, incremental change.

It can even be applied to philanthropy, with the argument that major changes in communities affect organisations that act within those communities and change their whole philanthropic policy. The following quote shows the work:

We develop an institutional perspective to unpack how and why major events within communities affect organizations in the context of corporate philanthropy. To test this framework, we examine how different types of mega-events (the Olympics, the Super Bowl, political conventions) and natural disasters (such as floods and hurricanes) affected the philanthropic spending of locally headquartered Fortune 1000 firms between 1980 and 2006. Results show that philanthropic spending fluctuated dramatically as mega-events generally led to a punctuated increase in otherwise relatively stable patterns of giving by local corporations.

III

In one way, all of this is rather interesting and intriguing, like any change theory in a complex system should be.

In an overly simplistic retelling, it can also devolve into "change happens when things change" tautology which turns out to be annoyingly difficult to argue against.

But taking the slightly more nuanced view here, the insightful part about the theory is that it defines change in two ways. One's the regular change.

Another's the type of change that comes from cataclysmic movements in the environment.

But most critically, you can only affect the second type of change if there's enough latent capability that gets built in through a long enough period of change-accumulation. And when something happens to the world around, the more adaptable parts of those gene pools come to the fore, and the shakeup helps bring about a new global solution to the problem of "how to fill these evolutionary niches in a semi stable fashion". Quoting from an article that analysed how lethal stress affects E. coli cultures:

Cells have several mechanisms that limit the number of mutations by correcting errors in DNA. Occasionally these repair mechanisms may fail so that a small number of cells in a population accumulate mutations more quickly than other cells. This process is known as “hypermutation” and it enables some cells to rapidly adapt to changing conditions in order to avoid the entire population from becoming extinct.

While this is specific to hypermutation, there are also arguments about how an organism's fitness drops after an environmental shift, even if it's recovered through subsequent evolution. Phenotypic plasticity might help create an emergency response to a new environment. Periodically stressful conditions also increase evolutionary rates by increasing the expression of fitness differences.

One way to think of this is as building latent capabilities through the accumulation of beneficial and neutral and non-stupid-harmful traits (or genes or memes or knowledge bytes or other reasonably equivalent information components). So in a time of non-upheaval, you push the boundaries of what's possible by getting better and better adapted to that circumstance. The flip side would be that this makes you pretty ossified and unable to respond in sufficiently nimble fashion to a supposed change.

Regardless of what Karellen would've said about his species, it seems highly unlikely that an evolutionary cul-de-sac would've been reached unless there simply was no space in their genetic code equivalent for further mutations. Or maybe they'd reached a sort of plateau whereby even the smallest mutation in any direction results in a substantially worse organism.

Which seems unlikely. In geographic terms it would be like a species standing atop one of those amazing hills in Greece, Meteora. Only monks with a particularly stringent view of monasticism and knowledge of architecture and love of adventure would even try for it. Falling to death from tall cliffs was a decent way to stop tourists at least until fairly recently.

An argument about why physics seems to have been stuck for more decades than anyone is comfortable with, written by Sabine Hossenfelder, says:

The major cause of this stagnation is that physics has changed, but physicists have not changed their methods. As physics has progressed, the foundations have become increasingly harder to probe by experiment. Technological advances have not kept size and expenses manageable. This is why, in physics today, we have collaborations of thousands of people operating machines that cost billions of dollars.

...

But even if you don’t care what’s up with strings and multiverses, you should worry about what is happening here. The foundations of physics are the canary in the coal mine. It’s an old discipline and the first to run into this problem. But the same problem will sooner or later surface in other disciplines if experiments become increasingly expensive and recruit large fractions of the scientific community.

The argument here is that physics is stuck in a rut because it's using old methods of finding and exploring hypotheses, and those methods need a shake-up.

But what if spending $40 billion on colliders is actually the right thing to do, except we can't do too many of those at once? It's exceedingly likely that we don't have the capital or the bandwidth to do experiments if they end up being so costly.

One option, loved by all technologists, is that we'll train Artificial Intelligence to be able to help us squeeze more juice out of the sparse-experiment-stone. It can even help us figure out which hypotheses might be better to test a priori.

Will it work? Like all good research questions, the answer is unclear. But it's interesting that future progress in physics might depend on making progress in computation and information theory.

As usual, xkcd addresses it rather handily.

Even though this only covers one dimension, the fact of the matter is that zooming up a level from platonic mathematics to fundamental quarks and upwards means there's going to be a tremendous amount of overlap in tools and methods, if not thought processes and capabilities.

Since co-dependency of knowledge bases turns out to be rather difficult to tangibly tease out, I looked at two indicators. One way to test is to look at the subjects that an aspiring scientist studies in graduate school. And a second is to look at what physicists who don't end up competing for Nobel prizes do with their lives.

For the first the biggest difference seems to be in the amount of computing that has crept into the curriculum both explicitly and implicitly. I haven't had time to create a full database since every school seems to delight in making their websites impossible to navigate, but random sampling has made this much at least pretty clear.

For instance the mathematics that physics graduates learn has changed over the past decade. They still learn the lovely old fashioned calculus of variations, but they also apply plenty of pure new mathematics. There are also plenty of other changes, like tough freshman classes and then fairly abstruse courses for another two years before a research project.

And those who don't end up becoming academics, which seems to be the majority and not just because of some offshoot of the bell curve, seem to be reasonably well versed in exactly those techniques that make them useful in a wide array of fields.

The best explanation I saw was on the University of Oregon site, which argued:

Beyond becoming professional scientists, physics students pursuing advanced degrees learn how to solve new problems, especially using mathematical methods of modeling and analysis. The skills you get in an advanced physics degree are useful in any career that involves solving challenging problems, which is to say just about anything.

In my head this was written with a big sigh preceding it. I wish reality were that cinematic.

IV

There is a persistent mythology about the importance of polymaths in pretty much any discipline. Even in this blog in its short life I've quoted John von Neumann more times than is healthy, and he is far from the only example. Regardless of the issue with definitions here it's at least unarguable once you go back a couple of centuries to Renaissance Florence.

One observation might be that what seemed polymathic genius in the era of Leonardo da Vinci seems commonplace amongst educated graduate students today, give or take a talent in painting. In fact if you add the ability to make AI enabled art or general digital artwork, the number swells rather considerably. We are all Renaissance level polymaths.

Which means that at any one time you needed to have the knowledge inherent in a large fraction of the world's books to truly advance art or architecture or science. But now those foundations, once high enough to be unattainable to most, have easy on-ramps available. And the spires have gotten steeper.

When Roger Penrose wrote to advance his theory about consciousness being external to known physics he had to get reasonably smart on mathematics and physics (where he arguably had a head start and would later get a Nobel) but also neuroscience and computational theory. It's probably fair to say it got mixed results from people (read both physicists and neuroscientists) who thought quantum computations in brain microtubules can't logically be what accounts for consciousness. Similarly his arguments about applying Gödel's incompleteness theorem to a computational theory of human intelligence were also roundly criticised by everyone within shouting distance, including mathematicians, computer scientists, philosophers, and pretty much anyone else who felt like it.

Seems like it's not so easy becoming a polymath these days. While it's possible that the naysayers are wrong, it's worth pointing out that Newton and von Neumann didn't face that particular problem. One way we solve for the difficulty of producing modern polymaths who can get to expert-level knowledge of multiple fields is to teach some parts of it early.

In fact the rise of interdisciplinary studies in universities seems to point at this very issue: everyone seems happily ensconced in their own ivory tower without being able to make bridges to each other's. And while that sounds like an easily solved issue, it turns out that actually transferring real knowledge is much harder. After all nobody who actually lives in an ivory tower likes to schlep all the way down, find a decent crossing, then work their way back up another tower.

It's also somewhat of a business shibboleth that you need cross functional teams to break through silos. Of course if you do it enough you end up in a lovely matrix where you get silos in two dimensions instead of one. But that's just solving for a new problem.

The Santa Fe Institute is a perfect case in point of what good practice in this attempt looks like. By bringing together researchers from a large number of fields within and outside of pure science, they try to make progress in the field of complexity studies. Since the study of complex adaptive systems effectively is the study of almost all human activity, this is a rather meaty endeavour.

While they've had some really strong contenders for cool research come out, including the work on scaling laws amongst organisms and cities, a full fledged idea of progress still seems elusive. For one thing it's unclear what success looks like for several of the problems, and it can often only be seen if there's a predictive or explanatory breakthrough.

There's also the wonderfully titled book Bridging Disciplines in the Brain, Behavioral and Clinical Sciences, which has this as a key chapter. For instance:

Up until a few decades ago scientists engaged in these endeavors identified themselves as anatomists, physiologists, psychologists, biochemists, and so on. In 1960 the International Brain Research Organization was founded to promote cooperation among the world's scientific resources for research on the brain. In 1969, the Society for Neuroscience was founded to bring together those studying brain and behavior into a single organization; its membership has grown from 1000 in 1970 to over 25,000 in 2000.

And also:

The National Institute on Aging (NIA) recognized that advances in understanding Alzheimer's disease required the coordinated efforts of neurologists, psychiatrists, neuropathologists, psychologists, neurochemists, molecular biologists, geneticists, and epidemiologists in an interdisciplinary approach to address the neurological, behavioral, familial, and social implications. To address that need, NIA developed a Request for Applications (RFA) for Alzheimer's Disease Research Centers (ADRCs).

That’s one view of how reaching across the scientific spires could actually end up being useful.

V

All that grounding done, one of the conclusions seems to be that progress in any area is also a function of progress in a bunch of other areas that might or might not be directly related. If knowing about other subjects is needed to make progress in some, and environmental shocks often can cause the emergence of new combinations and whole new species, and there needs to be a method to make knowledge more accessible across the sciences, then closing those loops means we’re left with the inevitable costs of progress.

The work done by Patrick Collison and Michael Nielsen also shows the impact of this: it feels like it's just much harder to make progress. While there are very likely bureaucratic and systemic reasons this is true, it is also possible that the current attempts at finding the next breakthrough just require more expertise and collaborations because that's just what's needed.

There is a whole article, more likely a book, or even more likely an entire career, that can be built on the back of trying to figure out why this is seemingly getting more difficult by the passing year. The fact that we're throwing money and brains at the problem of making progress and it not happening is an indication that perhaps our binding constraint isn't money or talent.

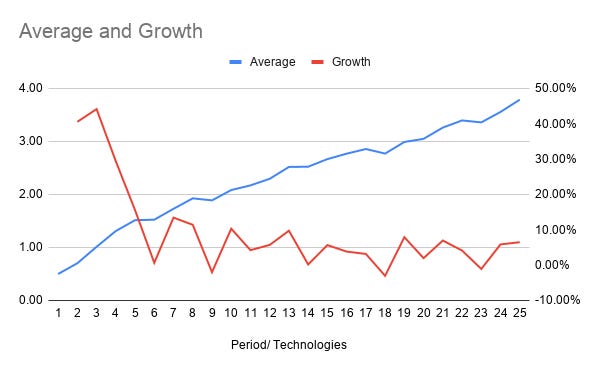

A toy model I'd built to try and see how technology progress in separate fields might give rise to new fields and insights had gotten to a similar conclusion. Bear in mind this also shows slowing growth rates, since the average grows linearly, with pauses and spurts when stars align.

For instance if we were to look at the potential ways in which science in general is expected to progress, new computational techniques and AI are top of the list. Slightly biased source #1 that's also saying something similar. And slightly biased source #2. And #3. And #4. And #5.

While there's clearly a flavour of the month feeling to some of this, the arguments show one thing for sure. They at least showcase one way by which we might be able to hit the next breakthrough in physics or biology, which might depend upon our ability to reach a breakthrough in computational science.

So we're left with two models to ponder:

The various branches of science all progress roughly together, in relative harmony. If you visualise the growth of science as a time-lapse video of skyscrapers going up (and why wouldn't you), you'll see roughly similar growth rates across them. Making connections gets easier and, in defiance of normal skyscraper building, as you go up building the next level gets easier because you can leverage the knowledge from all the other skyscrapers together too.

The second model is that there's a heavy random component in how quickly the buildings go up. By chance occasionally they might all grow at the same rates, but more often you'll see times when one grows and another is stalled. In times like those you have to wait for a breakthrough to be realised in one level to be truly applicable to the others.
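The two models can be put side by side in a toy simulation. This is a minimal sketch under my own assumptions, not the toy model mentioned earlier: each field is a tower that adds a level with some probability per step, and the "waiting for a breakthrough elsewhere" idea is approximated by a made-up rule that no tower may build more than `max_lead` levels above the shortest one. The names `grow_fields`, `stalled_steps`, and `max_lead` are hypothetical.

```python
import random


def grow_fields(n_fields=5, steps=200, p_advance=0.5, max_lead=None, seed=0):
    """Grow n_fields towers and return the history of heights at each step.

    p_advance: chance that a field adds one level in a given step.
    max_lead:  if set, a field that is already max_lead levels above the
               shortest tower is blocked (an assumed stand-in for waiting
               on progress in other fields).
    """
    rng = random.Random(seed)
    heights = [0] * n_fields
    history = [tuple(heights)]
    for _ in range(steps):
        floor = min(heights)  # snapshot so every field sees the same state
        for i in range(n_fields):
            blocked = max_lead is not None and heights[i] - floor >= max_lead
            if not blocked and rng.random() < p_advance:
                heights[i] += 1
        history.append(tuple(heights))
    return history


def stalled_steps(history, field=0):
    """Count the steps in which the given field did not gain a level."""
    return sum(1 for a, b in zip(history, history[1:]) if a[field] == b[field])


# Model one: every field advances every step, in lockstep.
harmony = grow_fields(p_advance=1.0)
# Model two: random growth, and nobody can run far ahead of the slowest field.
lumpy = grow_fields(p_advance=0.5, max_lead=3)

print("harmony final heights:", harmony[-1], "stalls:", stalled_steps(harmony))
print("lumpy final heights:  ", lumpy[-1], "stalls:", stalled_steps(lumpy))
```

The gating rule is the whole argument in miniature: in the second model a field can be perfectly capable of building and still sit idle, because the constraint lives in a different tower.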

A priori it's impossible to say why progress in certain fields is harder at some times and easier at others. But our Bayesian prior doesn't need to be that potential for progress in physics in 2010 is comparable to progress in biology in 2020 or progress in AI in 1980. They could all have their own paths, which often intertwine with each other's, making a nicely tangled bit of scientific spaghetti.

Which is effectively the equivalent to the punctuated equilibria theory of progress. If we believe that progress requires us to use all of human knowledge that we possess to push against the constraints of ignorance, then we need to do that. But the fact that we hit patches where the growth seems to lag could also just be because we're in an environmental stasis period. And changing the environment means we need to push something hard enough that we will break through.

It’s also a question of resource allocation. If we simultaneously have the option to spend equally on a bunch of areas which all have vastly different levels of ‘progress potential’, we could. Or we could double down on what’s actually working and see how that might reach a higher plateau if we were to press ahead in full force. We can’t know which is “optimal” of course but the existence of a non-gradual model of scientific progress should make us question whether we’re even formulating the problem of stagnation properly.