Symposium 1: a surfeit of interesting things

Bio-terrorism, AI writing, ecosystem thinking, spotting outliers, billions as the new millions

There are too many interesting things happening in the world, and I have been accumulating too many links to write essays about. So I’m starting a weekly symposium. Hopefully it will be like the penny universities of 18th century coffeehouses where, for the price of a coffee, you could participate in all sorts of stimulating conversation.

Please treat this as an open thread! Do share whatever you’ve found interesting lately or been thinking about, or ask any questions you might have.

To kick off, this week was busy, and we had two Strange Loop essays:

I sort of love this one, I admit. I started writing an essay on AI aimed at executives and investors, and it accidentally turned into a mini-book. This is one part of it, and it dives into a literature review to understand the current research trends and what they mean for where we will eventually end up.

And if you take it as a blueprint there’s at least a mega unicorn to be built here!

A key aspect of modernity is an increased technological ability to know what’s going on, where and when. And the hope is that with the answers to these being made apparent, we’d get to understand the ‘how’ as well.

However, the increase in verification ability has also meant a decrease in trust. We almost see it as an antiquated concept at times. But the decrease in trust has come with a cost, which is that we forgot why it was important. Because no matter how we create an equation to predict the outcomes from whatever measured variables, there’s an error term, which accounts for a large chunk of the things that make life worth living in the first place!

It’s also something I was curious about before, which is how the East India Company managed to run a global empire with only a few dozen people sitting in London, at a time when messages took months to send and oversight was impossible!

1. Delay, Detect, Defend. A policy paper from Kevin Esvelt that looks at the threat from bioterrorism and engineered pandemics in the future, and lays out some thoughts on how to counter it.

The core thesis is that engineered pandemics are getting more feasible, and pandemics are vastly more dangerous than nuclear weapons. Kevin makes a good case for better detection methods and for sprucing up things like 100 day plans, because Omicron went from being ID-ed in Africa to infecting 26% of Americans within 100 days.

His thesis to defend basically rests on similar arguments to what we’ve heard for AI existential risk: heavy monitoring to identify problematic research directions, investing in speed-of-response capabilities, banning certain experiments like gain-of-function (GoF) until proven safe(r), making sure USAID and NIH can’t fund virus enhancement work or studies, and stockpiling PPE so we can use it quickly.

There are some really good ideas in there, especially around investing in better ventilation and UV light to kill viruses and bacteria. I find myself looking at the other suggestions as possibly great in an environment where the pan-governmental organisations could work extremely nimbly and coordinate quickly, but I don’t think that’s the world we live in!

I’m also confused about the point of terrorism. He talks about Seiichi Endo, the Aum Shinrikyo zealot who made the sarin gas for them and tried to get Ebola virus, as the person to watch out for.

Any modern individual with Endo’s educational background and resources could almost certainly obtain Ebola virus by assembling it from synthetic DNA using established protocols; many other viruses are equally accessible

He also mentions Ted Kaczynski, the Unabomber, as an example of someone who did crazy things but was also a brilliant scientist and researcher.

There is one argument I’d love to hear more in these discussions though: Why isn’t there a hell of a lot more terrorism? We have better technology today and it’s far easier to cause chaos if you really wanted to.

My tentative hypothesis is that while “crazy” and “extremely intelligent” do coincide, they don’t coincide often enough with “will take action to harm millions”. We’ve made causing chaos not lucrative and not very interesting for the most part.

That said, the epidemic of school shootings in the US might be an exception. And while we had a few ‘drive a car into a crowd’ type events, we just haven’t seen terrorists care so much about media glory that they imitated other terrorists. It’s a good sociological question, and if we’re worried about various forms of terrorism we really ought to understand how this works!

2. Writing is hard. Erik Hoel writes about AI writing proliferating in media organisations.

While I believe in Sturgeon’s Law, this does make me feel upset. Not just because it mechanises a part of writing at a time when the AI writing just isn’t good enough to replace humans, but because it says something about us as a society and what we value.

I also find it weird, because writing to me isn’t separable from thinking, and in fact seems to be a very particular form of thinking which is different to all others.

For instance, sometimes when I start writing I have this vague feeling of an idea in my mind. Sometimes it’s a phrase, sometimes it’s a sentence, sometimes it’s an image or a graph. It’s inchoate, fleeting, and bound to disappear if I suddenly feel my phone buzz or my doorbell ring. I tell myself that if it’s good it will come back, but this is a belief that helps things move along and not exactly something I know for sure.

But it’s an odd mental fugue state. Recently I started coding again, and one interesting side effect is that the process completely changes how I think. To the point that if I want to context-switch to writing it takes a bit. I have to go for a walk or get a coffee or generally ‘reset’ my brain in some way.

I know flow states exist and that getting into one is the goal for most work, and I manage to do this sometimes, but the interesting part to me is that the brain configures itself for a particular task, and like an AI trained for one thing asked to do another, somehow becomes … less good? Or at least feels less good?

We get simulacra of this by trying to ask the AI to “write like Erik” but even so it somehow feels insufficient because it’s just not good. Our efforts to teach the AI to talk like us, through things like Reinforcement Learning from Human Feedback, also somewhat lobotomise the AI into being less useful.

We have to find another way to teach the AI how to think and how to speak, without those acting at cross purposes!

Considering that after Cormac McCarthy passed we don’t really have a literary icon left, it almost feels as if we’re at the end of an age, watering down a skill honed over generations with pale imitations.

3. AI training is now everywhere, and utopia is around the corner. Synthesis school, the progressive school built on the principles of Ad Astra (Elon Musk’s school for his kids), has created an AI tutor.

I think AI tutors are amazing and will help a ton of kids and adults in their learning. They are, as advertised, infinitely patient and incredibly versatile and increasingly personalised. These are all excellent qualities in a teacher.

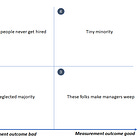

However, I’m going to be a bit contrarian. This, like the internet before, is likely to pay dramatically higher dividends to those who are motivated over those who are not.

4. Affirmative action is disaffirmed. The Supreme Court ruling took Harvard and other major universities to task for using race as a factor in their admissions, especially to help African Americans and disadvantage Asian Americans.

the U.S. Supreme Court’s conservative majority said that admissions policies that consider race as a factor are unconstitutional, overturning about 45 years of precedent

The dissent makes for fun reading, or at least skimming. Opinions here.

I can’t quite figure out how to think about this yet. On the one hand, parts of society are clearly underprivileged and giving them a helping hand is a good thing to do. On the other hand, focusing on fairness and meritocracy is what our whole society is set up to do.

I’d written this essay a while back on the problems with using our methods of assessment to choose “the best” talent, no matter how you choose it. You say IQ, people start gaming those. You say SATs, same, and the privileged go to expensive tuition centers.

And considering this gamification exists, there’s no way to create a Veil Of Ignorance and give the best opportunities to those who deserve it and assign these in an individually fair and societally optimal fashion. Maybe a future AI can help figure this out.

Just so you know, my vantage point comes from a different place. I grew up until college in India, where you write multiple competitive exams after high school to get into a university. I wrote 6 such exams, one of the lower numbers amongst my peers.

And in most universities there were quotas - for Scheduled Castes and Tribes (call it maybe Indigenous folk in Western parlance?), for other minorities, for women - to the point that at some, more than 50% of the seats were literally unavailable to me.

Of course I felt this was the greatest injustice. Later on I wondered if it was just after all, if we get to make a better society by giving others the opportunity to try and get an education.

I have no answers, beyond that some amount of affirmative action is helpful and past some point it gets harmful, and I don’t know where the Laffer Curve for this lies. But the fact that it doesn’t get talked about much is frustrating.

As one of the better commentaries, here’s Noah Smith writing about affirmative action. It’s worth reading!

5. Michael Levin from Tufts University has a wonderful Nautilus article written about him.

I love reading biological articles because they show various complex ecosystems and how they work. Most of my working life has gone into figuring out our economic systems, growth of technologies and financial markets, and the best way I have found to understand that is through this metaphor.

Biological systems are exceptional at helping understand equilibria. Tyler Cowen had a list of such considerations from his recent trip to Kenya, and it’s worth contemplating.

You don’t necessarily need to jump in and apply the same equations to try and understand this either. Playing the glass-bead game is enough, to learn how to think a certain way. A good example is what Alex Komoroske does here in the presentation on coordination headwinds, showing how organisations are like slime molds.

6. $1.3 Billion rounds or bust, as seen by the raise from Inflection AI.

Tech is in such a weird place. Most companies are cutting their employees to the bone while some companies are raising insane sums of money to spend it almost entirely on Nvidia GPUs. I don’t know if it’s the new internet or the new oil or the new mobile or the new whatever, but the fact that there is this cognitive dissonance must be what’s driving everyone insane.

I also like the fact that 1.3 Billion is the funding the EU is providing for the Digital Europe Programme, for Europe’s digital transition and cybersecurity.

The main work programme worth €909.5 million for the period of 2023 - 2024 covers the deployment of projects that use digital technologies such as data, AI, cloud, advanced digital skills. These projects will deliver concrete benefits for innovation ecosystems, open standards, SMEs, cities, public services, and the environment.

Alongside the main work programme, the Commission published a work programme focusing on cybersecurity, with a budget of €375 million for the period of 2023 - 2024 to enhance the EU collective resilience against cyber threats. This will be implemented by the European Cybersecurity Competence Centre.

I don’t know what that means, but if they have the same funding as an LLM startup I’m sure it’ll be great.

And in any case, I feel it’s another example of how most people misunderstand the role of money. Training GPT-4 cost like $100m, which is a lot, and that’s why people think OpenAI has a commanding lead.

On the other hand they did it with like 200 people and it only cost $100m. Which is nothing! Well, not nothing, if you were to give me even a hundredth of that I’d love it, but WeWork raised 100x the amount to reinvent buildings with wifi. Most large companies spend more than this on stationery.

Capital is never enough to create a moat. What you need is actual knowhow on how to use the money. You could go the crypto route and use it for yacht parties but generally speaking the AI folk seem to be much too geeky to go for that sort of thing. Plus, you know, they might actually be building god.

Other notes: A company I’ve angel invested in, Consensus, which I also use shamelessly for any academic research, is looking for a full stack engineer. Here’s the role. Christian and Eric are great, and I can recommend checking them out.

“writing to me isn’t separable from thinking, and in fact seems to be a very particular form of thinking which is different to all others.”

This is why I think AI taking over writing is overstated. It is the forms of writing that *don't* constitute thinking that are most under threat.

The bit of the Hoel post I could see annoyed me because 1. he was reading way too much into it and 2. he misinterpreted some of it anyway. Actually not everything the Guardian publishes is original journalism - like most papers, they pay for wire copy from services like Reuters, the Press Association etc. I think the point of the bit he highlights is to say they won't use AI to mass-generate filler.

It just seemed like someone who was pro-Butlerian jihad was interpreting a fairly anodyne statement in the most negative possible light.

Disclosure: I work there (not in journalism, or in the working group mentioned in the statement)