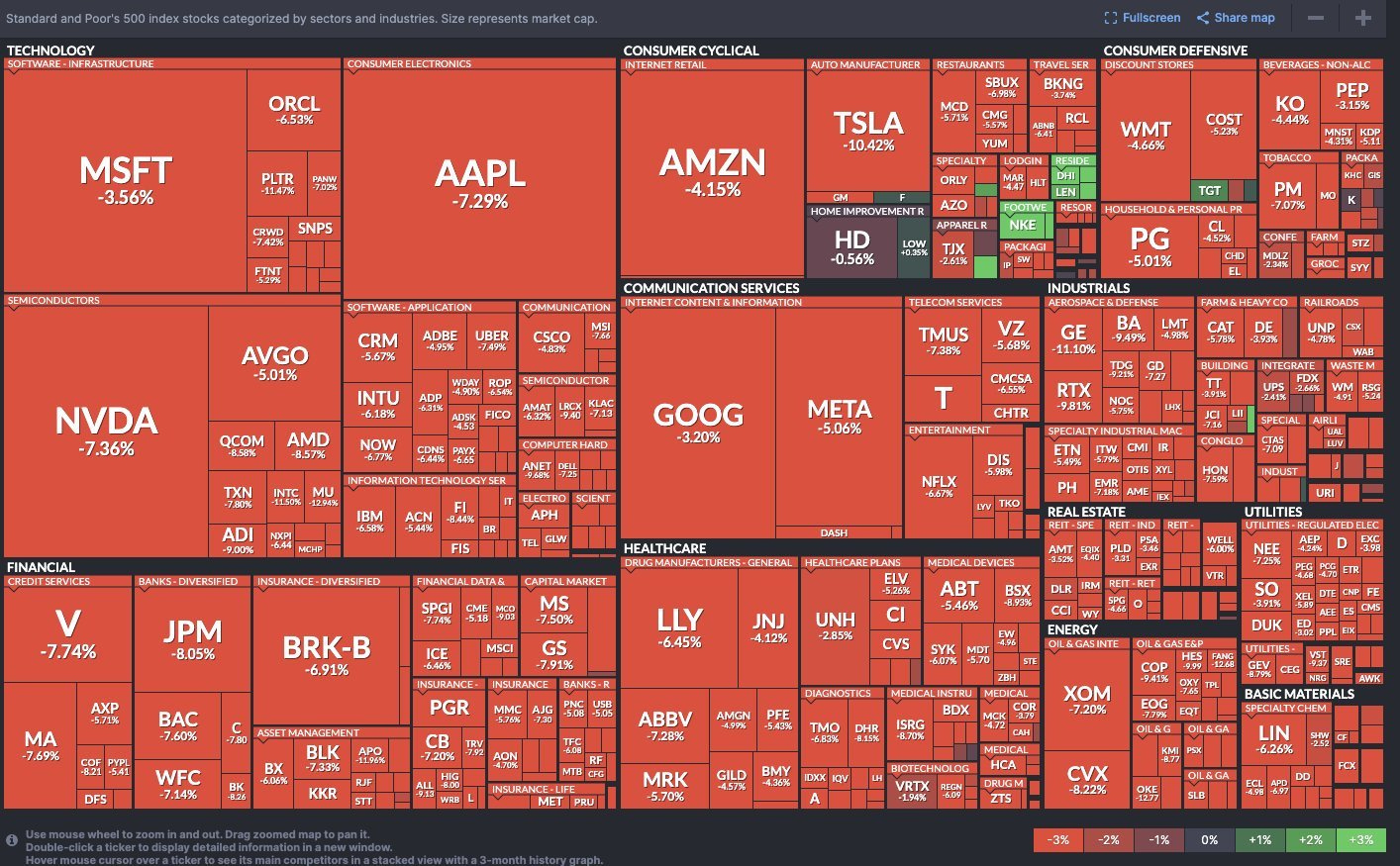

It looks like the US has started a trade war. This is obviously pretty bad, as the markets have proven. 15% down in three trading sessions is a historic crash. And it’s not slowing. There is plenty of econ 101 commentary out there about why this is bad, from people across every aisle you can think of, but it’s actually pretty simple and not that hard to unravel anyway, so I was more interested in a different question: the process by which this decision was taken in the first place.

And when I thought about what caused this to happen, even after all of Trump’s rhetoric on the campaign trail (which folks like Bill Ackman didn’t believe to be true), it seemed silly enough that there had to be some explanation.

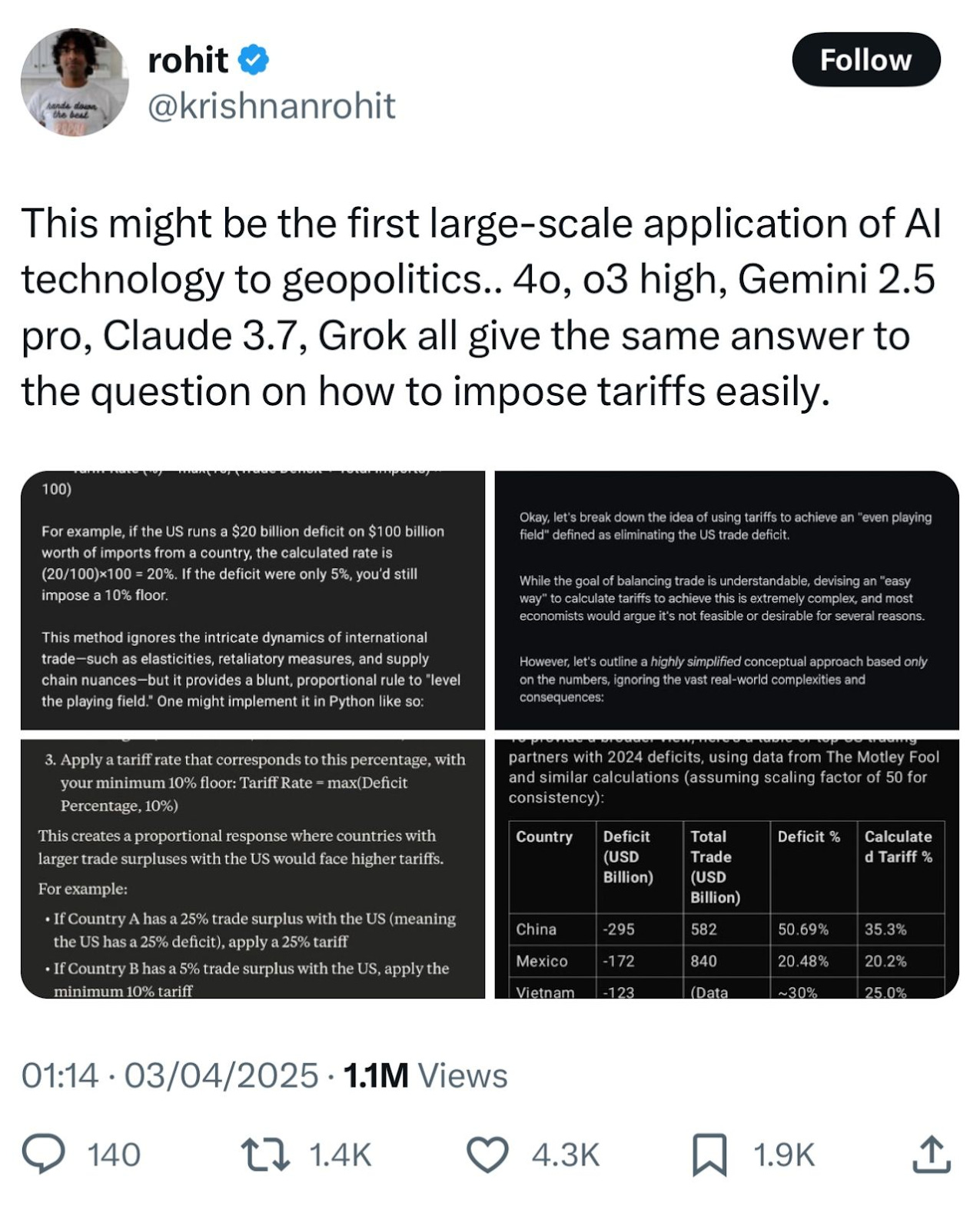

Even before the formula used to calculate the tariffs was published, showing how they actually analysed this, I wondered if it was something that came from an LLM. It had that aura of extreme confidence in a hypothesis for a government plan. It’s also something you can test. And if you ask the question “What would be an easy way to calculate the tariffs that should be imposed on other countries so that the US is on even-playing fields when it comes to trade deficit? Set minimum at 10%.” to any of the major LLMs, they all give remarkably similar answers.
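To make that concrete, here is a minimal sketch of the kind of calculation such a prompt tends to produce. It assumes the formula reduces, as was widely reported, to the bilateral goods deficit divided by US imports from that country, halved, with the 10% floor from the prompt. The function name, the halving step and the sample numbers are my own illustrative assumptions, not an official method.

```python
def naive_reciprocal_tariff(us_imports, us_exports, floor=0.10, discount=0.5):
    """Hypothetical sketch of a deficit-based tariff rate.

    us_imports: value of goods the US buys from the country
    us_exports: value of goods the US sells to the country
    floor:      minimum rate (the "set minimum at 10%" in the prompt)
    discount:   assumed halving of the raw deficit ratio
    """
    if us_imports <= 0:
        # No imports means no ratio to compute, so fall back to the floor.
        return floor
    deficit = us_imports - us_exports
    raw_ratio = deficit / us_imports
    # A surplus or a small deficit still gets the floor rate.
    return max(floor, raw_ratio * discount)


# Made-up numbers, purely for illustration.
print(naive_reciprocal_tariff(us_imports=100.0, us_exports=50.0))
print(naive_reciprocal_tariff(us_imports=100.0, us_exports=120.0))
```

Notice what is missing: nothing in here looks at the other country’s actual tariffs, at services trade, or at why the deficit exists in the first place. The only inputs are two trade totals.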

The answer is, of course, wrong. Very wrong. Perhaps pre-Adam Smith wrong.

This is “Vibe Governing”.

The idea that you could just ask for an answer to any question and take the output and run with it. And, as a side effect, wipe out trillions from the world economy.

A while back when I wrote about the potential negative scenarios of AI, ones that could actually happen, I said two things. One was that once AI is integrated into the daily lives of a large number of organisations, it could interact in complex ways and create various Black Monday type scenarios (when the market fell sharply due to automated trading). The other was that reliance on AI would make people take its answers at face value, sort of like taking Wikipedia as gospel.

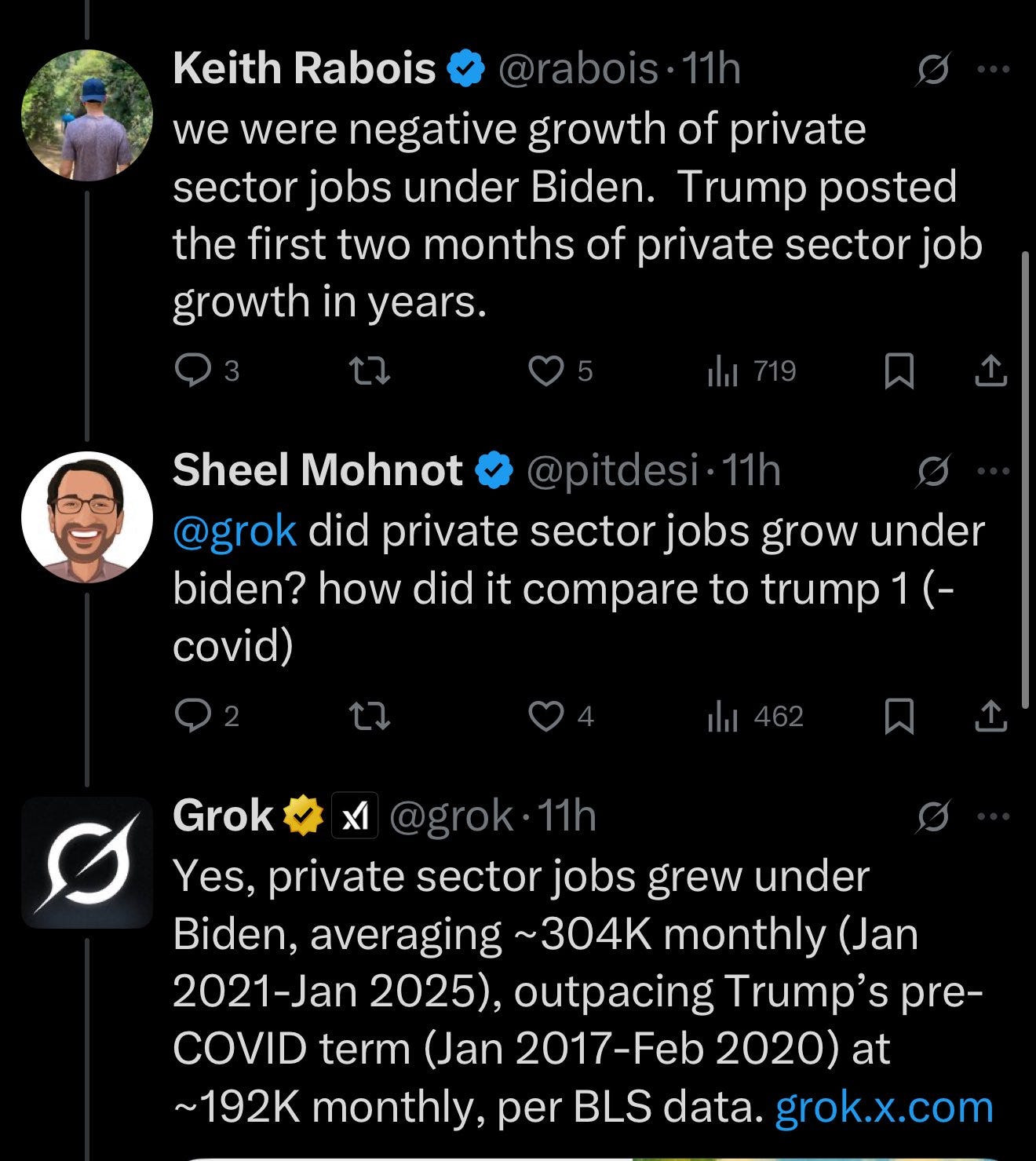

But in the aggregate, the latter is good. It’s better that they asked the LLMs. Because the LLMs gave pretty good answers even with really bad questions. They tried to steer the reader away from the recommended formula, noted the problems inherent in that application, and explained in exhaustive detail the mistaken assumptions inside it.

When I dug into why the LLMs seem to give this answer even though it’s obviously wrong from an economics point of view, it seemed to come down to data. First of all, asking about tariff percentages based on “putting US on an even-footing when it comes to trade deficit” is a weird thing to ask. The framing seems to have come from Peter Navarro’s 2011 book Death by China.

The Wikipedia analogy is even more true here. You have bad inputs somewhere in the model, you will get bad outputs.

LLMs, to their credit, and unlike Wikipedia, do try immensely hard to not parrot this blindly as the answer and give all sorts of nuances on how this might be wrong.

Which means two things.

1. A world where more people rely on asking such questions would be a better world because it would give a more informed baseline, especially if people read the entire response.
2. Asking the right questions is exceedingly important to get better answers.

The question is once we learn to ask questions a bit better, like when we learnt to Google better, whether this reliance would mean we have a much better baseline to stand on top of before creating policies. The trouble is that LLMs are consensus machines, and sometimes the consensus is wrong. But quite often the consensus is true!

So maybe we have easier ways to create less flawed policies especially if the writing up of those policies is outsourced to a chatbot. And perhaps we won’t be so burdened by idiotic ideas when things are so easily LLM-checkable?

On the other hand, Google’s existed for a quarter century and people still spread lies on the internet, so maybe it’s not a panacea after all.

However, if you did want to be concerned, there are at least two reasons:

1. Data poisoning is real, and will affect the answers to questions if posed just so!
2. People seem overwhelmingly ready to “trust the computer” even at this stage.

The administration thus far has been remarkably ready to use AI:

- They used it to write Executive Orders.
- The “research” paper that seemed to underpin the three-word formula seems like a Deep Research output.
- The tariffs on Nauru and so on show that they probably used LLMs to parse some list of domains or data to set them, which is why we’re setting tariffs on penguins (yes, really).

While they’ve so far been using it with limited skill, and playing fast and loose with both laws and norms, I think this perhaps is the largest spark of “good” I’ve seen so far. Because if the Federal Govt can embrace AI and actually harness it, perhaps the AI adoption curve won’t be so flat for so long after all, and maybe the future GDP growth that was promised can materialise, along with government efficiency.

Last week I had written that with AI becoming good, vibe coding was the inevitable future. If that’s the case for technical work, which it is slowly starting to be and seems more and more likely to be the case for the future, then the other parts of the world can’t be far behind!


Whether we want to admit it or not, Vibe Governing is the future. We will end up relying on these oracles to decide what to do, and help us do it. We will get better at interpreting the signs and asking questions the right way. We will end up being less wrong. But what this whole thing shows is that, given the base knowledge we now have access to, doing things the old-fashioned way is not going to last all that long.

This is an excellent analysis, and it also highlights the importance of 'writing for the LLMs'. I know we technically call this data poisoning, but it's a good illustration of how one *influences the AI*, especially if you have an idea outside of consensus.

You hit on something that I think is critical, and frequently missed, when discussing LLMs and their use, whether by government officials or anyone else. Knowing *how* to ask good questions, and what makes a question good, is as important as knowing that you can just ask questions. And I think this is the type of skill that one only acquires after using LLMs for a long time, surely more than a dozen hours. It takes a while to learn how to extract value from LLMs. And in an administration as chaotic as Trump's appears to be, taking the time to learn how to use an LLM properly seems unlikely.